Demo Video

Example Code


```python
import os

from athina.evals import (
    RagasAnswerCorrectness,
    RagasContextPrecision,
    RagasContextRelevancy,
    RagasContextRecall,
    RagasFaithfulness,
    ResponseFaithfulness,
    Groundedness,
    ContextSufficiency,
)
from athina.keys import OpenAiApiKey
from athina.runner.run import EvalRunner
from dotenv import load_dotenv

# Read environment variables (e.g. OPENAI_API_KEY) from a local .env file
load_dotenv()

# Configure the OpenAI API key used by the LLM-graded evals
OpenAiApiKey.set_key(os.getenv("OPENAI_API_KEY"))

# Load the dataset: one dict per data point
dataset = [
    {
        "query": "query_string",
        "context": ["chunk_1", "chunk_2"],
        "response": "llm_generated_response_string",
        "expected_response": "ground truth (optional)",
    },
    # ... more data points with the same keys
]

# Evaluate the dataset across a suite of eval criteria,
# running up to 10 evals in parallel
EvalRunner.run_suite(
    evals=[
        RagasAnswerCorrectness(),
        RagasContextPrecision(),
        RagasContextRelevancy(),
        RagasContextRecall(),
        RagasFaithfulness(),
        ResponseFaithfulness(),
        Groundedness(),
        ContextSufficiency(),
    ],
    data=dataset,
    max_parallel_evals=10,
)
```
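Before calling `run_suite`, it can help to catch malformed rows locally rather than partway through a run. The sketch below assumes the row shape from the example above (`query`, `context` as a list of chunk strings, `response`, and an optional `expected_response`). `check_rows` is a hypothetical helper written for illustration; it is not part of the athina package, and athina's own validation rules may differ.

```python
from typing import Any, Dict, List

# Key names copied from the example dataset; "expected_response" is optional there.
REQUIRED_KEYS = ("query", "context", "response")


def check_rows(rows: List[Dict[str, Any]]) -> List[str]:
    """Hypothetical pre-flight check (not part of athina): list problems in rows."""
    problems: List[str] = []
    for i, row in enumerate(rows):
        if not isinstance(row, dict):
            problems.append(f"row {i}: expected a dict, got {type(row).__name__}")
            continue
        # Every row needs the three core fields.
        for key in REQUIRED_KEYS:
            if key not in row:
                problems.append(f"row {i}: missing required key '{key}'")
        # In the example, context is a list of retrieved chunks, not one string.
        ctx = row.get("context")
        if ctx is not None and not (
            isinstance(ctx, list) and all(isinstance(c, str) for c in ctx)
        ):
            problems.append(f"row {i}: 'context' should be a list of strings")
    return problems


if __name__ == "__main__":
    sample = [
        {"query": "q1", "context": ["chunk_1"], "response": "r1"},
        {"query": "q2", "context": "chunk_1", "response": "r2"},
    ]
    for problem in check_rows(sample):
        print(problem)  # prints: row 1: 'context' should be a list of strings
```

Running `check_rows(dataset)` and stopping on a non-empty result is one way to fail fast before any API calls are made.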

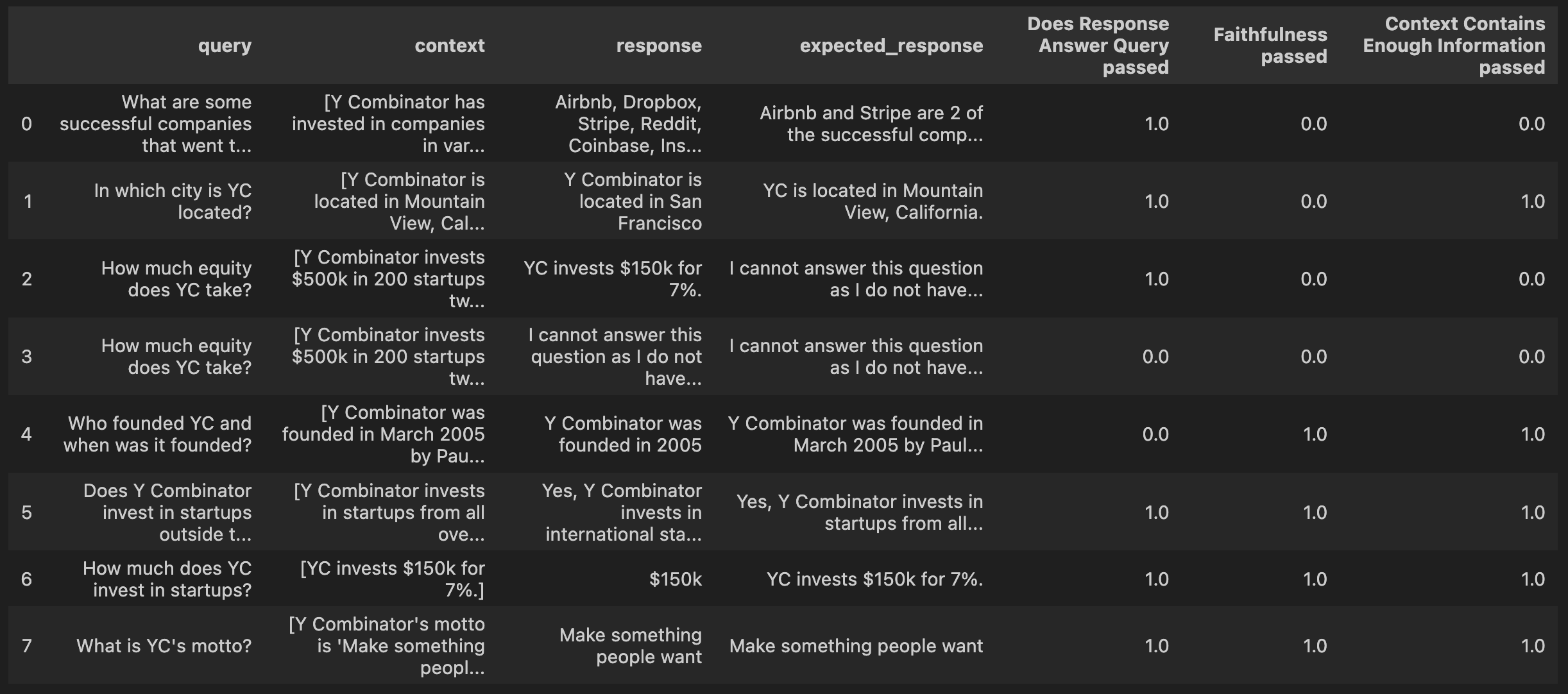
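This page does not say how `EvalRunner` schedules work under `max_parallel_evals`, for example whether the cap applies per eval, per data point, or overall. As a rough mental model only, the sketch below caps the total number of (eval, data point) jobs in flight using a `ThreadPoolExecutor`. `run_bounded`, `has_context`, and `response_nonempty` are hypothetical names, and the two toy rule-based checks stand in for the real LLM-graded evals; this is not athina's implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
EvalFn = Callable[[Row], bool]


def run_bounded(
    evals: Dict[str, EvalFn], rows: List[Row], max_parallel: int
) -> List[Dict[str, Any]]:
    """Run every (eval, row) pair with at most max_parallel jobs running at once.

    Hypothetical illustration of a parallelism cap; not athina's internals.
    """
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # Submit one job per (eval, row) pair; the pool queues jobs beyond the cap.
        futures = [
            (name, i, pool.submit(fn, row))
            for name, fn in evals.items()
            for i, row in enumerate(rows)
        ]
        # Collect results in submission order, regardless of finish order.
        return [
            {"eval": name, "row": i, "passed": fut.result()}
            for name, i, fut in futures
        ]


# Toy stand-ins for real evals (hypothetical): simple rule-based checks.
def has_context(row: Row) -> bool:
    return len(row["context"]) > 0


def response_nonempty(row: Row) -> bool:
    return bool(row["response"].strip())


if __name__ == "__main__":
    rows = [
        {"query": "q1", "context": ["chunk_1"], "response": "r1"},
        {"query": "q2", "context": [], "response": "  "},
    ]
    suite = {"has_context": has_context, "response_nonempty": response_nonempty}
    for result in run_bounded(suite, rows, max_parallel=10):
        print(result)
```

A thread pool fits here because real evals mostly wait on network calls to an LLM; a higher cap shortens wall-clock time but also raises the request rate against the provider's limits.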

Response Format