Steps

Step 1: Install Required Libraries
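This guide relies on three Python packages: boto3 for S3 access, pandas for tabular data, and the Athina client for uploading. Assuming the PyPI names are `boto3`, `pandas`, and `athina-client` (check the Athina documentation for the current package name), they can typically be installed with `pip install boto3 pandas athina-client`. The sketch below is one possible way to confirm the packages are importable before continuing; the `REQUIRED` mapping and the `missing_packages` helper are illustrative names, not part of any library.

```python
import importlib.util

# Maps import name -> assumed PyPI package name (verify before installing).
REQUIRED = {
    "boto3": "boto3",
    "pandas": "pandas",
    "athina_client": "athina-client",
}


def missing_packages(modules=REQUIRED):
    """Return the pip names of packages whose modules cannot be found."""
    return [
        pip_name
        for module_name, pip_name in modules.items()
        if importlib.util.find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, try: pip install " + " ".join(missing))
    else:
        print("All required packages are installed.")
```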

Step 2: Configure AWS S3 Credentials
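boto3 can pick up credentials from several places, including the standard environment variables `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_DEFAULT_REGION`. The following is a minimal sketch that assumes the environment-variable approach; if you use a shared credentials file or an IAM role instead, the check is unnecessary. The helper names `missing_aws_env_vars` and `make_s3_client` are hypothetical.

```python
import os

# Standard variable names that boto3 reads automatically.
REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_env_vars(env=None):
    """Return the names of required AWS variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


def make_s3_client(region_name=None):
    """Create an S3 client after checking that credentials are present."""
    missing = missing_aws_env_vars()
    if missing:
        raise RuntimeError("Missing AWS credentials: " + ", ".join(missing))
    # Imported lazily so the credential check works without boto3 installed.
    import boto3

    region = region_name or os.environ.get("AWS_DEFAULT_REGION")
    return boto3.client("s3", region_name=region)
```

Keeping the keys in environment variables, rather than hard-coding them in the script, avoids committing secrets to version control.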

Step 3: Retrieve Data from S3 and Load into Pandas
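As a rough illustration of this step, the sketch below downloads a single object with boto3's `get_object` call and parses it with `pandas.read_csv`. It assumes the file is a CSV; the function name `load_csv_from_s3` and the bucket and key values in the usage note are placeholders, not values from this guide.

```python
import io

import pandas as pd


def load_csv_from_s3(bucket, key, s3_client=None):
    """Download one CSV object from S3 and parse it into a DataFrame."""
    if s3_client is None:
        # Imported lazily so the function can also be exercised with a stub client.
        import boto3

        s3_client = boto3.client("s3")
    response = s3_client.get_object(Bucket=bucket, Key=key)
    # response["Body"] is a streaming object; read it fully into memory.
    raw_bytes = response["Body"].read()
    return pd.read_csv(io.BytesIO(raw_bytes))
```

A call might look like `df = load_csv_from_s3("your-bucket-name", "path/to/data.csv")`, followed by `df.head()` to inspect the first rows. For other formats such as JSON or Parquet, the corresponding pandas reader would replace `read_csv`.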

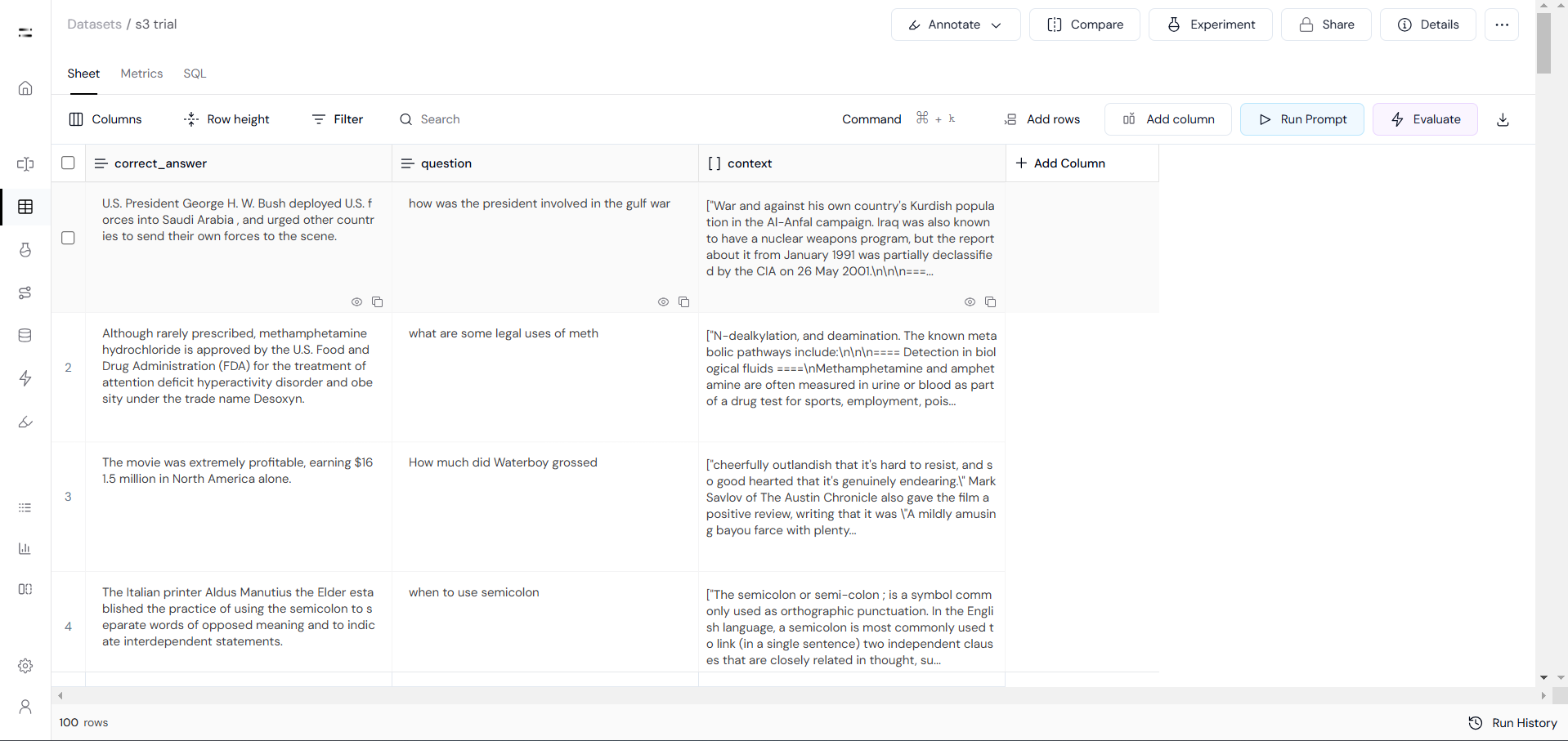

Step 4: Upload Data to Athina IDE

To upload the retrieved data into Athina IDE, follow these steps:

- Set up the Athina API key

- Convert the DataFrame into a format suitable for Athina IDE

- Upload the dataset using Dataset.add_rows()
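The three steps above can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: the import paths `athina_client.datasets` and `athina_client.keys`, the `AthinaApiKey.set_key` call, and the `dataset_id` and `rows` keyword arguments reflect the Athina Python SDK as commonly documented and should be checked against the current SDK reference. The environment variable name `ATHINA_API_KEY` and the helper names `dataframe_to_rows` and `upload_rows` are assumptions made for this example.

```python
import os

import pandas as pd


def dataframe_to_rows(df):
    """Convert a DataFrame into a list of dicts, one per row.

    Missing values (NaN) become None so they serialize cleanly as JSON null.
    Assumes every cell holds a scalar value, as is the case after read_csv.
    """
    records = df.to_dict(orient="records")
    return [
        {column: (None if pd.isna(value) else value) for column, value in row.items()}
        for row in records
    ]


def upload_rows(dataset_id, rows):
    """Append rows to an existing Athina dataset (assumed SDK usage)."""
    # Imported lazily; assumes the Athina client package is installed.
    from athina_client.datasets import Dataset
    from athina_client.keys import AthinaApiKey

    # Assumed environment variable name for the API key.
    AthinaApiKey.set_key(os.environ["ATHINA_API_KEY"])
    Dataset.add_rows(dataset_id=dataset_id, rows=rows)
```

With a DataFrame `df` from the previous step, usage would be along the lines of `upload_rows("your-dataset-id", dataframe_to_rows(df))`, where the dataset ID is a placeholder for the ID of a dataset that already exists in Athina IDE.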