Demo Stage: The Inspect Workflow 🔎

Manual Inspect Worklow- Run prompt on single datapoint

- Inspect the response manually

- Change prompt / datapoint and repeat

MVP Stage: The Eyeball Workflow 👁️👁️

This workflow is similar to the previous workflow, but instead of running 1 datapoint at a time, you are running many datapoints together. However, you still don’t have ground truth data (the ideal response by the LLM) so there’s nothing to compare against. Eyeball Worklow - Run prompt on dataset with multiple datapoints - Put outputs onto a spreadsheet / CSV - Manually review (eyeball) the responses for each - Repeat This worklow is fine pre-MVP, but is not great for iteration.Iteration Stage: The Golden Dataset Workflow 🌟🌟

You now have a golden dataset with your datapoints, and ideal responses. You can now set up some basic evals. Great! Now you actually have a way to improve performance systematically. The workflow looks something like this Iteration Worklow - Create golden dataset (multiple datapoints with expected responses) - Run prompt on test dataset - Option 1: Manual Review - Put outputs onto a spreadsheet / CSV - Manually compare LLM responses against expected responses - Option 2: Evaluators - Create evaluators to compare LLM response against expected response - But what metrics to use? How to compare 2 pieces of unstructured text? - Build internal tooling to: - run these evaluators, and score them - track history of runs - a UI This is actually a good workflow for all stages.⛭ Enter the Athina Worklow… 🪄

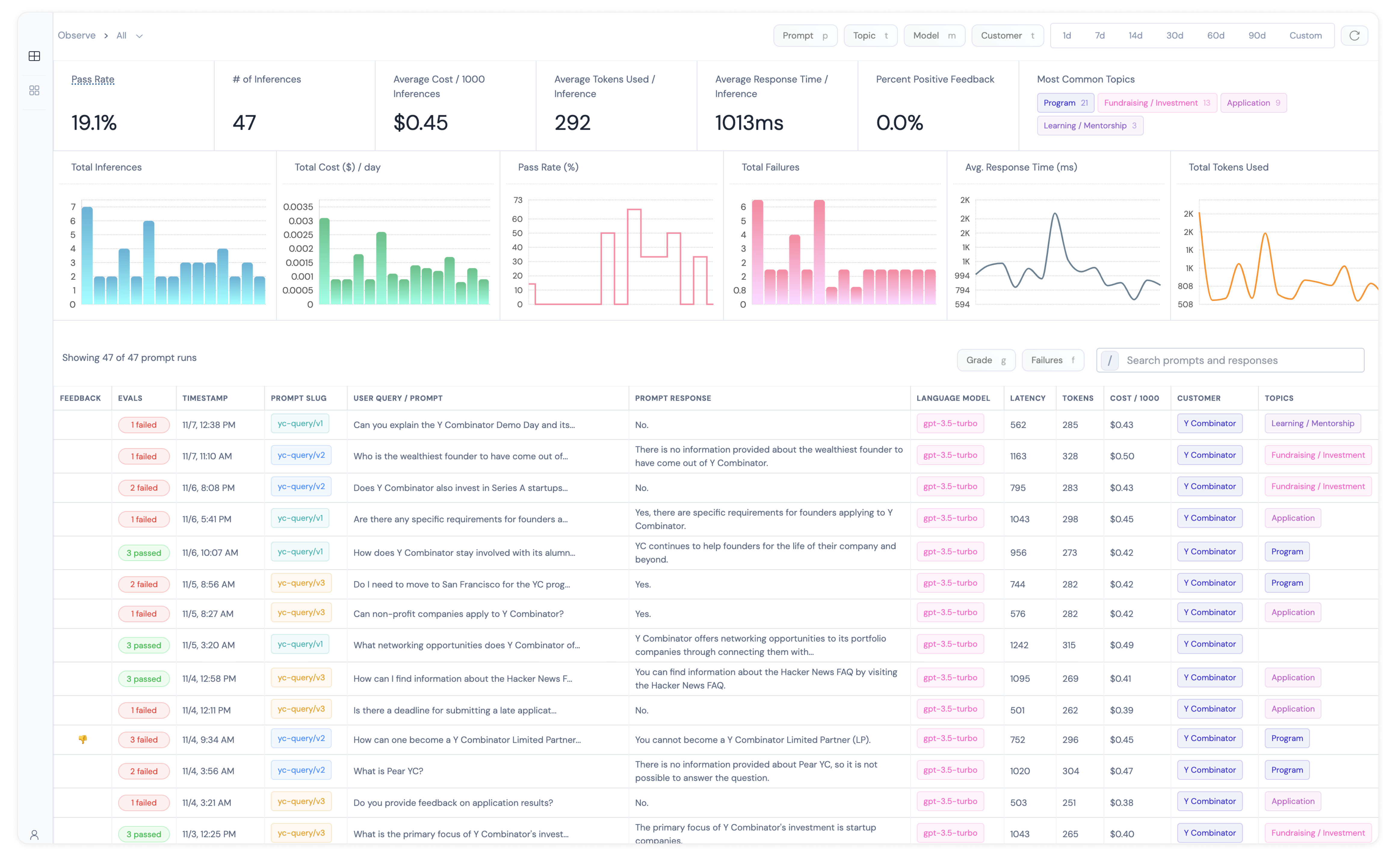

Athina’s workflow is designed for users at any stage of the AI product development lifecycle. Athina Monitor: Demo / MVP / Production Stage Setup time: < 5 mins- Run your inferences, and log data to Athina Monitor.

- View the results on a dashboard.

- Preserve historical data including prompt, response, cost, token usage and latency (+ more)

- UI to manually grade your responses with 👍 / 👎

Athina Evaluate: Development / Iteration Stage

Setup time: 2 mins

Now that you’re really trying to focus on improving model performance, here’s how you can do it:

Athina Evaluate: Development / Iteration Stage

Setup time: 2 mins

Now that you’re really trying to focus on improving model performance, here’s how you can do it:

- Configure experiments and run evaluations programmatically

- Run preset evals or create your own custom eval

- Eval results are automatically logged to Athina Develop

- Works in a python notebook – but you can also view the results on a dashboard.

- Also preserves historical data including prompt, response, datapoints, eval metrics (+ more)