Introduction

Large Language Models (LLMs) are useful for many tasks, such as text summarization, question answering, and solving domain-specific problems. However, selecting the best model for a specific use case can be challenging because models differ in performance, inference cost, and efficiency. A poor choice can lead to inefficiency and higher expenses, while the right model ensures reliability and cost-effectiveness. This guide provides a step-by-step approach to comparing and evaluating multiple LLMs for your use case using Athina AI’s Experiments.

Let’s start by understanding the key criteria for comparing LLMs.

Criteria to Judge LLMs

When selecting an LLM, it’s important to evaluate models against clear performance criteria to ensure they align with your requirements. Here are the three primary criteria to consider:

1. Latency

Latency refers to the time it takes for a model to generate a response. For time-sensitive applications, lower latency is critical to a seamless user experience. Compare the latency of models under similar conditions (the same prompts and settings) to find the fastest option.

2. Token Usage

Token usage refers to the number of tokens processed in a single request, including both input and output tokens. Because many providers charge per token, often at different rates for input and output tokens, minimizing usage is key to cost efficiency, especially in large-scale applications. Compare token usage across models on the same tasks, and prioritize those that return accurate results while keeping token consumption low.

In Athina, Prompt Tokens refer to input tokens, and Completion Tokens refer to output tokens.
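To compare latency and token usage under the same conditions, you can time each request and record the prompt and completion tokens it reports. The sketch below is a minimal, framework-agnostic harness, not Athina’s implementation. It assumes a hypothetical model interface that returns `(response_text, prompt_tokens, completion_tokens)`; `fake_model` is a stand-in that counts words as tokens, and the per-1K-token prices passed to `cost` are placeholders, not any provider’s actual rates.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: takes a prompt and returns
# (response_text, prompt_tokens, completion_tokens).
ModelFn = Callable[[str], Tuple[str, int, int]]


@dataclass
class RunStats:
    latency_s: float
    prompt_tokens: int      # input tokens ("Prompt Tokens" in Athina)
    completion_tokens: int  # output tokens ("Completion Tokens" in Athina)

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    def cost(self, input_price_per_1k: float, output_price_per_1k: float) -> float:
        # Input and output tokens are priced separately (placeholder rates).
        return (self.prompt_tokens / 1000) * input_price_per_1k + (
            self.completion_tokens / 1000
        ) * output_price_per_1k


def measure(model: ModelFn, prompt: str) -> RunStats:
    """Time a single request and capture its token usage."""
    start = time.perf_counter()
    _, prompt_tokens, completion_tokens = model(prompt)
    latency = time.perf_counter() - start
    return RunStats(latency, prompt_tokens, completion_tokens)


def compare(models: Dict[str, ModelFn], prompts: List[str]) -> Dict[str, dict]:
    """Run every model on the same prompts; report mean latency and total tokens."""
    results = {}
    for name, model in models.items():
        runs = [measure(model, p) for p in prompts]
        results[name] = {
            "mean_latency_s": statistics.mean(r.latency_s for r in runs),
            "total_tokens": sum(r.total_tokens for r in runs),
        }
    return results


def fake_model(prompt: str) -> Tuple[str, int, int]:
    # Hypothetical stand-in for an API call; counts words as "tokens".
    answer = "chemical energy"
    return answer, len(prompt.split()), len(answer.split())


stats = measure(fake_model, "What does photosynthesis convert light energy into?")
print(stats.total_tokens)  # 9
print(round(stats.cost(0.5, 1.5), 4))  # 0.0065
print(compare({"model-a": fake_model}, ["What does photosynthesis convert light energy into?"]))
```

Because every model receives the same prompts, the averaged latencies and token totals are directly comparable. In a real run you would replace `fake_model` with a call to each provider’s SDK and read the token counts from its response.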

3. Evaluation Metrics

Evaluation metrics assess how well a model performs on specific tasks, helping you determine its overall quality and suitability for your needs. Depending on the use case, you can use the following:

- Answer Completeness: Ensures the response fully answers the user’s query without missing critical details.

- Context Sufficiency: Evaluates whether the provided context contains enough information to support the generated answer.

- Faithfulness: Measures the factual consistency of the generated answer against the given context.

These are just a few of the many evaluation metrics Athina offers. See the Athina Evaluations Documentation for the complete list and more detailed information. You can also create custom evaluation metrics to suit your needs.
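As an illustration of the kind of custom metric you might define for an extractive Q&A task (this is not how Athina implements its built-in evals), the sketch below scores a model answer against a reference answer using exact match and token-level F1, the scoring style popularized by the SQuAD benchmark. The function names are hypothetical; how you would register such a metric in Athina is not shown here.

```python
import re
import string
from collections import Counter

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> bool:
    """True when the normalized prediction equals the normalized reference."""
    return normalize_answer(prediction) == normalize_answer(reference)


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    # Multiset intersection counts each shared token at most as often as it appears in both.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The chemical energy.", "chemical energy"))  # True
print(round(token_f1("energy", "chemical energy"), 3))  # 0.667
```

Exact match rewards only fully correct answers, while token F1 gives partial credit, so reporting both helps separate "close" answers from wrong ones.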

Dataset

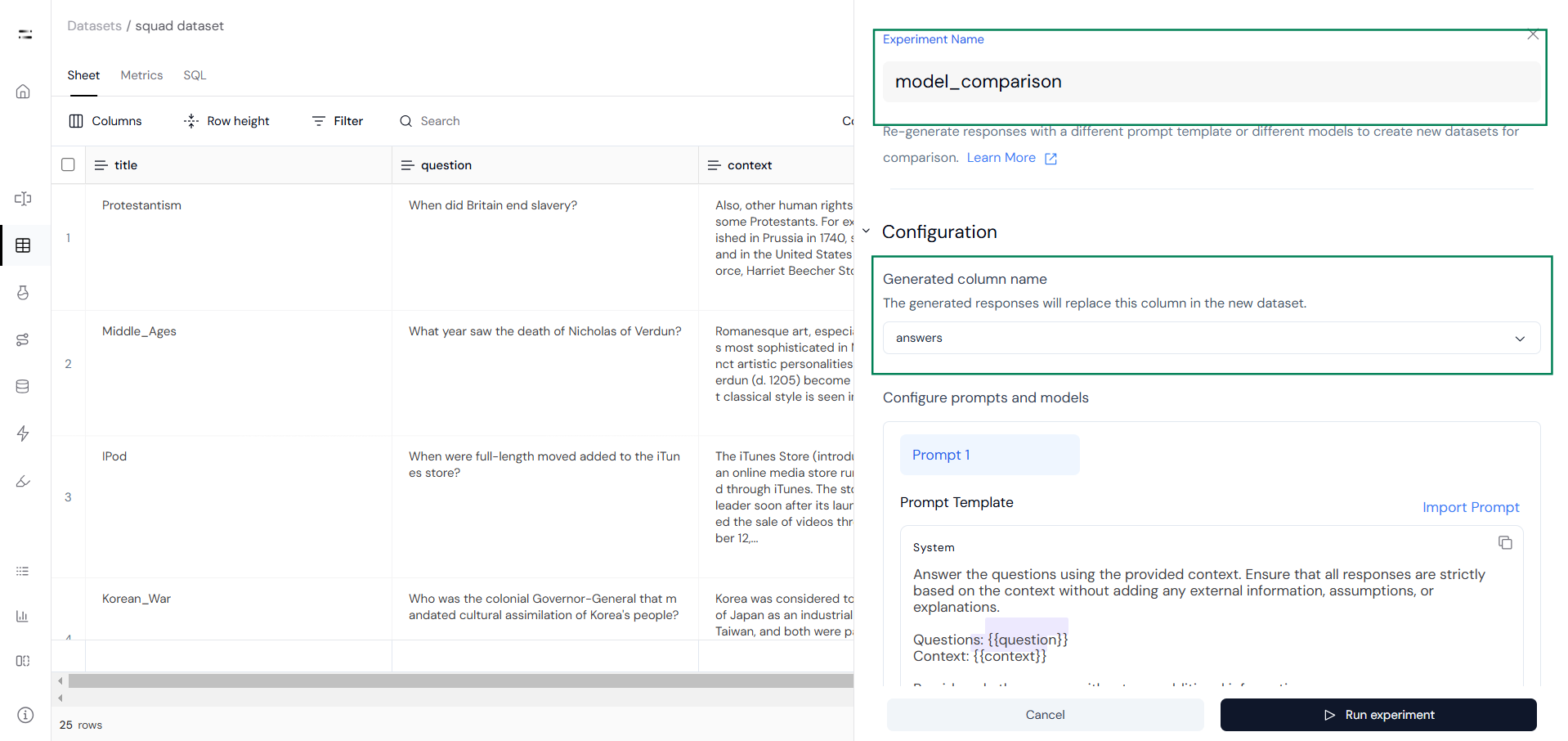

In this guide, we will compare multiple models using the Stanford Question Answering Dataset (SQuAD) and Athina. This dataset is widely used for Q&A tasks because it pairs questions with context passages from Wikipedia from which the answers can be extracted.

Comparing Multiple Models in Athina

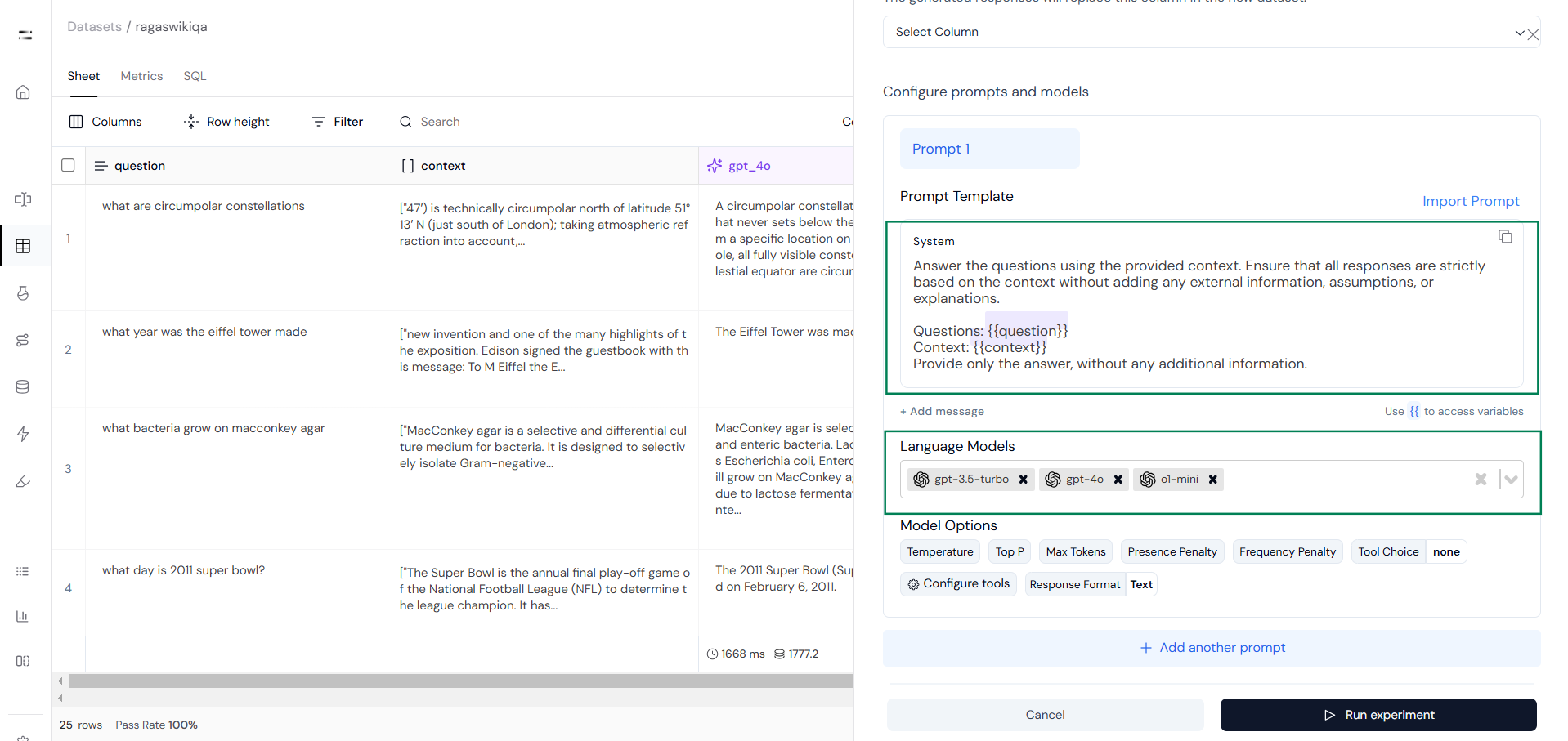

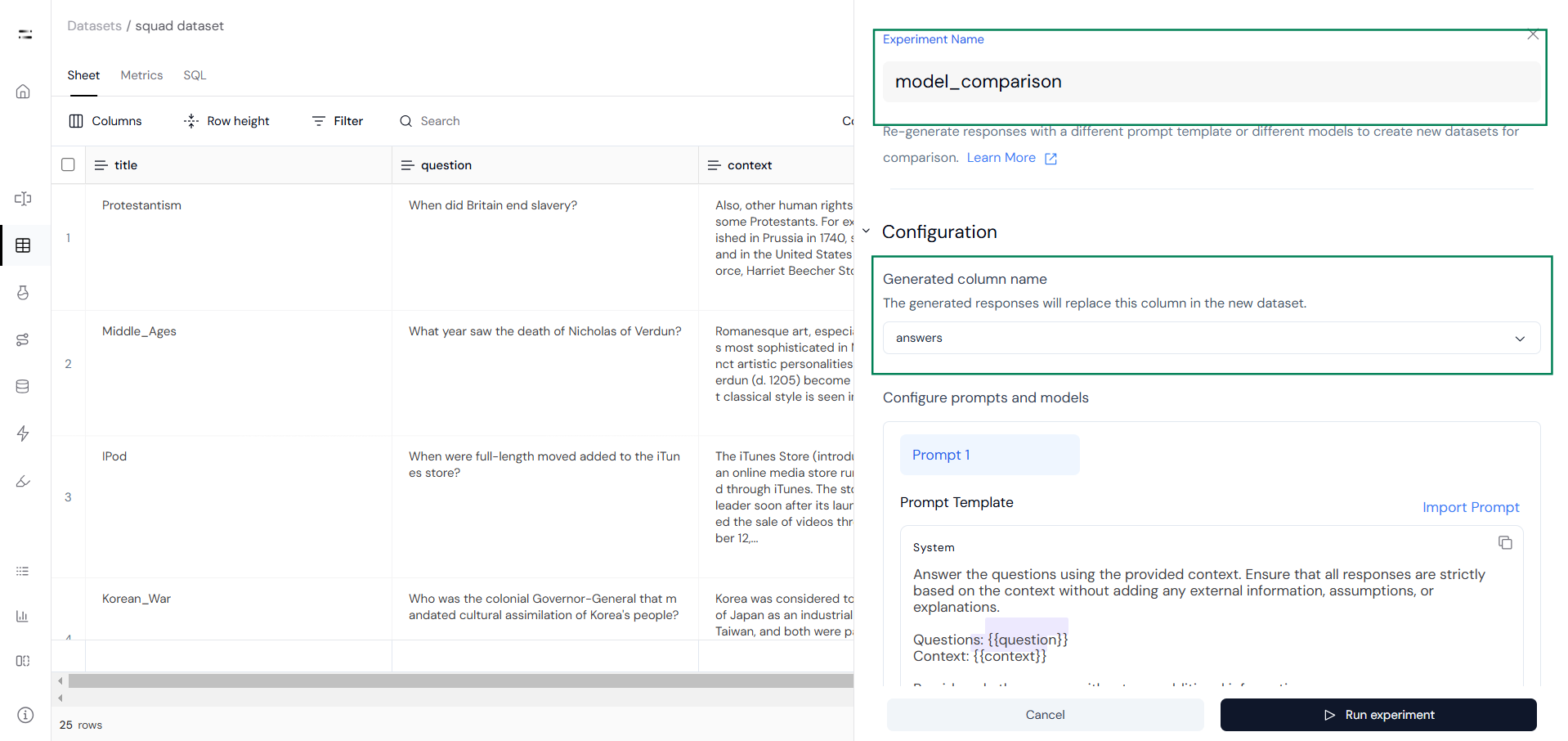
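In SQuAD, each question comes with a Wikipedia context passage, and the reference answer is a span of that passage located by its character offset. The sketch below mirrors the field layout of the public SQuAD v1.1 release as distributed through the Hugging Face `datasets` library (`id`, `title`, `context`, `question`, and `answers` with `text` and `answer_start`). The record itself is a made-up example, and `make_record` and `spans_match` are hypothetical helpers, not part of Athina or the SQuAD tooling.

```python
from typing import List, TypedDict


class Answers(TypedDict):
    text: List[str]          # reference answer strings
    answer_start: List[int]  # character offset of each answer in the context


class SquadRecord(TypedDict):
    id: str
    title: str
    context: str
    question: str
    answers: Answers


def make_record(record_id: str, title: str, context: str, question: str, answer: str) -> SquadRecord:
    """Build a SQuAD-style record; the answer must appear verbatim in the context."""
    start = context.find(answer)
    if start < 0:
        raise ValueError("answer is not a span of the context")
    return {
        "id": record_id,
        "title": title,
        "context": context,
        "question": question,
        "answers": {"text": [answer], "answer_start": [start]},
    }


def spans_match(record: SquadRecord) -> bool:
    """Check that every answer_start offset points at its answer text."""
    context = record["context"]
    answers = record["answers"]
    return all(
        context[start : start + len(text)] == text
        for text, start in zip(answers["text"], answers["answer_start"])
    )


# Illustrative record written for this sketch, not an actual SQuAD entry.
record = make_record(
    "example-1",
    "Photosynthesis",
    "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "What does photosynthesis convert light energy into?",
    "chemical energy",
)
print(record["answers"])  # {'text': ['chemical energy'], 'answer_start': [42]}
print(spans_match(record))  # True
```

Because every answer is extractable from its context, the context and question columns are all a model needs to answer, and the reference answer remains available for scoring.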

In this example, we will test how well models generate concise and accurate answers to questions based on specific contexts. We will also evaluate the latency of each model and the number of tokens it consumes to generate a response.

Step 1: Create Experiments

Start by creating an experiment. Add a name for your experiment, select the relevant column name, and input the prompt.
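Conceptually, the prompt acts as a template whose variables are filled from the selected dataset columns for each row. The sketch below assumes a `{{column_name}}` placeholder syntax (check the Athina documentation for the exact variable format); `render_prompt` and the template wording are hypothetical and only illustrate how one template becomes a per-row prompt that asks for a concise answer.

```python
import re
from typing import Mapping

# Matches {{column}} or {{ column }} placeholders (assumed syntax).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, row: Mapping[str, str]) -> str:
    """Fill {{column}} placeholders with values from one dataset row."""

    def substitute(match: re.Match) -> str:
        column = match.group(1)
        if column not in row:
            raise KeyError(f"template references missing column: {column}")
        return str(row[column])

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical prompt asking for a short, context-grounded answer.
TEMPLATE = (
    "Answer the question using only the context below. "
    "Reply with a short phrase copied from the context.\n\n"
    "Context: {{context}}\n"
    "Question: {{question}}\n"
    "Answer:"
)

row = {
    "context": "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "question": "What does photosynthesis convert light energy into?",
}
print(render_prompt(TEMPLATE, row))
```

Raising an error on a missing column surfaces template typos immediately instead of silently sending a prompt with an unfilled variable to every model.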

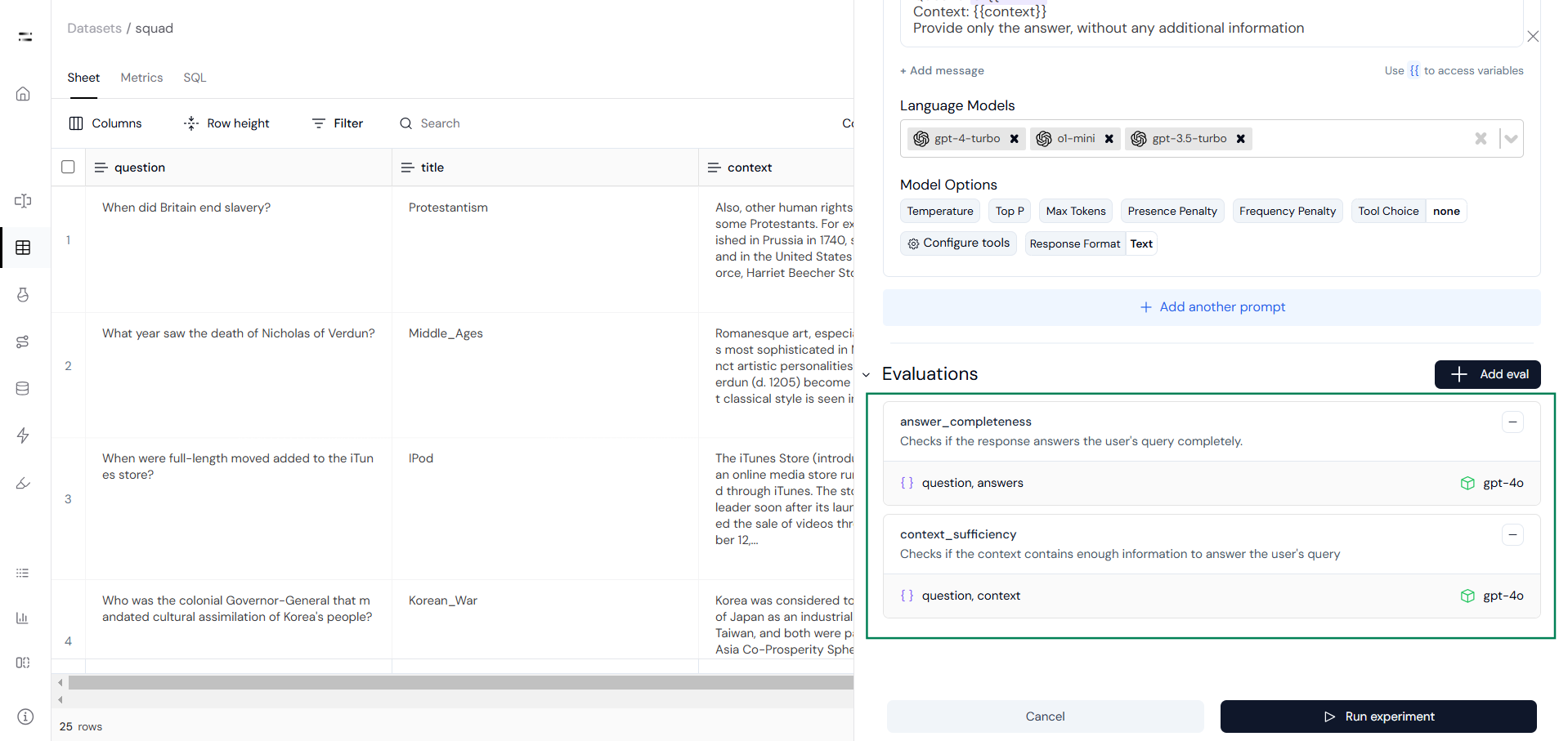

Step 2: Add Evals

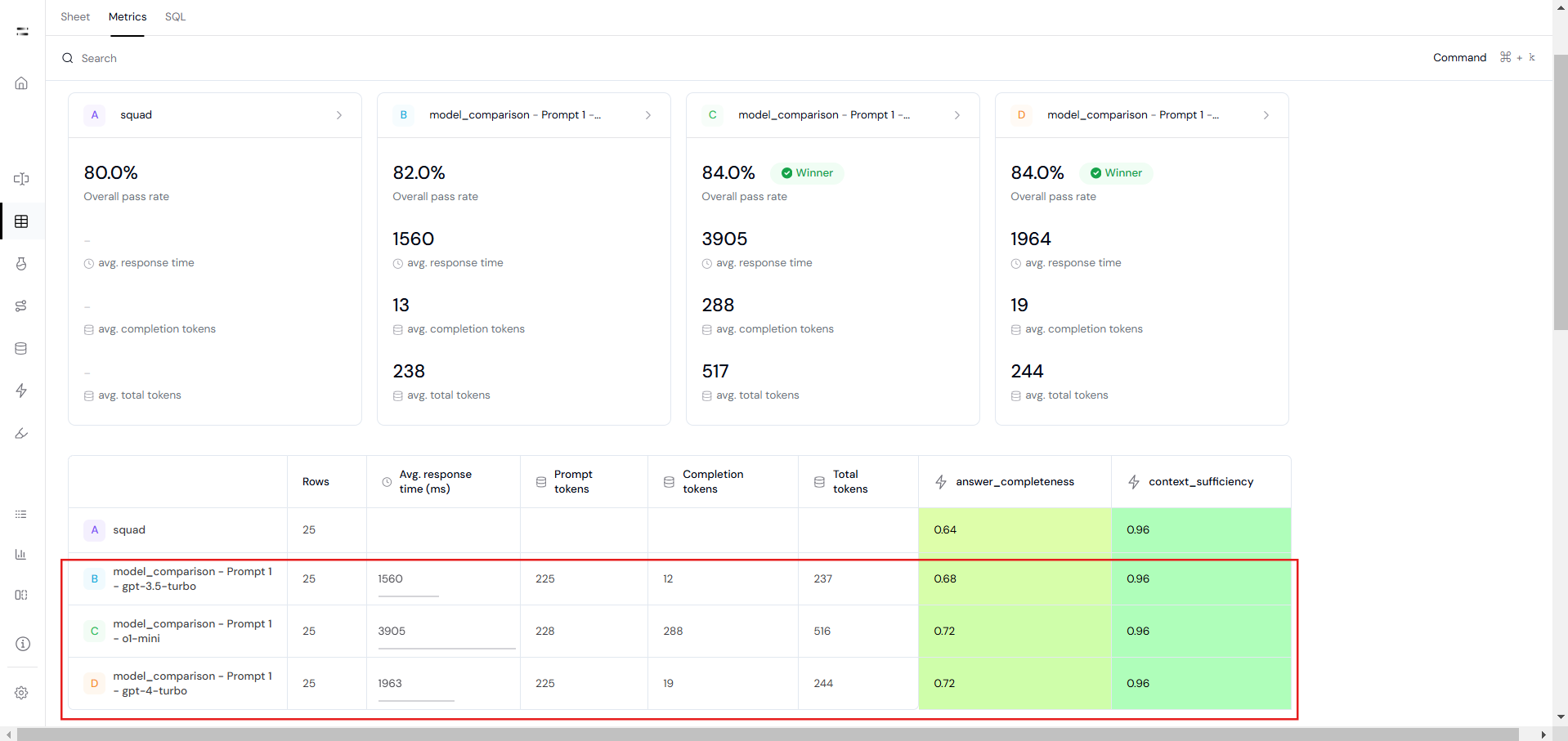

Step 3: Metrics Comparison

The Metrics section provides a side-by-side comparison of performance trends across models. Use these insights to identify the model that best fits your use case, aiming for a balance between performance, cost, and speed so that the selected model meets your priorities and constraints.
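One way to make the balance between performance, cost, and speed explicit is a weighted score over per-model summaries. The sketch below shows one such approach; it is not a feature of Athina. It min-max normalizes each metric across the compared models, inverts latency and cost so that higher is always better, and combines the three with weights you choose. The model names, metric values, and weights are placeholders, not measured results.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ModelSummary:
    name: str
    mean_latency_s: float    # lower is better
    cost_per_request: float  # lower is better
    eval_pass_rate: float    # fraction of rows passing evals, higher is better


def _normalize(values: List[float], higher_is_better: bool) -> List[float]:
    """Min-max scale to [0, 1]; flip so that 1.0 is always the best value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def rank_models(summaries: List[ModelSummary], weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (model name, weighted score) pairs, best first."""
    quality = _normalize([s.eval_pass_rate for s in summaries], higher_is_better=True)
    speed = _normalize([s.mean_latency_s for s in summaries], higher_is_better=False)
    cheapness = _normalize([s.cost_per_request for s in summaries], higher_is_better=False)
    scored = [
        (
            s.name,
            weights["quality"] * quality[i]
            + weights["speed"] * speed[i]
            + weights["cost"] * cheapness[i],
        )
        for i, s in enumerate(summaries)
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Placeholder summaries for illustration only.
summaries = [
    ModelSummary("model-a", mean_latency_s=1.2, cost_per_request=0.004, eval_pass_rate=0.90),
    ModelSummary("model-b", mean_latency_s=0.6, cost_per_request=0.001, eval_pass_rate=0.80),
]
print(rank_models(summaries, {"quality": 0.6, "speed": 0.2, "cost": 0.2}))
print(rank_models(summaries, {"quality": 0.4, "speed": 0.3, "cost": 0.3}))
```

Because min-max normalization is relative to the set of models being compared, scores change when you add or remove a model. Shifting weight between `quality` and `speed`/`cost` shows how your priorities change which model comes out on top.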