Why Use CI/CD for Evaluation?

- Automated Quality Checks: Every time you update a model, modify a prompt, or adjust other settings, Athina Evals automatically validates the changes to ensure consistency and reliability.

- Early Issue Detection: If a model starts producing incorrect, unsafe, or unstructured responses, Athina will catch the problem before deployment, preventing bad outputs from reaching users.

- Scalable and Repeatable Testing: Instead of relying on manual tests, CI/CD pipelines run evaluations automatically on every change, so each check is performed the same way every time.

- Seamless Integration with GitHub Actions: With GitHub Actions, you can trigger evaluations on every pull request or code push, making model and prompt validation an integral part of your development workflow.

Set Up Evaluations in a CI/CD Pipeline

Now, let’s go through the step-by-step workflow for automating evaluations in your CI/CD pipeline with GitHub Actions.

Step 1: Create a GitHub Workflow

Step 2: Create an Evaluation Script
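
As a rough illustration of what an evaluation script in a CI job needs to do, here is a minimal sketch. It does not use the Athina SDK: `EvalResult`, `run_evals`, `pass_rate`, `gate`, and the 0.9 threshold are all hypothetical names and values chosen for this example, and `run_evals` is only a placeholder check (non-empty response) that you would replace with calls to your actual evaluators. The part that matters for CI is the last step: a non-zero exit code makes the workflow step fail.

```python
"""Hypothetical evaluation gate for a CI job (a sketch, not the Athina API)."""
import sys
from dataclasses import dataclass


@dataclass
class EvalResult:
    name: str
    passed: bool


def run_evals(rows):
    """Placeholder evaluator: a row passes when its response is non-empty.

    Replace this body with calls to your real evaluation library.
    """
    return [
        EvalResult(
            name=f"non_empty_response[{i}]",
            passed=bool(str(row.get("response") or "").strip()),
        )
        for i, row in enumerate(rows)
    ]


def pass_rate(results):
    """Fraction of evaluations that passed (0.0 for an empty list)."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def gate(results, threshold=0.9):
    """Return an exit code: 0 if the pass rate meets the threshold, else 1."""
    return 0 if pass_rate(results) >= threshold else 1


def main():
    # Illustrative rows only; a real script would load a dataset from a file.
    rows = [
        {"query": "What is CI/CD?", "response": "Continuous integration and delivery."},
        {"query": "What is a prompt?", "response": "The input text sent to a model."},
    ]
    results = run_evals(rows)
    print(f"pass rate: {pass_rate(results):.2f}")
    return gate(results)


if __name__ == "__main__":
    exit_code = main()
    if exit_code:
        # A non-zero exit code marks the CI step as failed.
        sys.exit(exit_code)
```

Keeping the threshold as a parameter of `gate` lets the same script be strict on the main branch and more lenient on experimental branches, if that suits your workflow.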

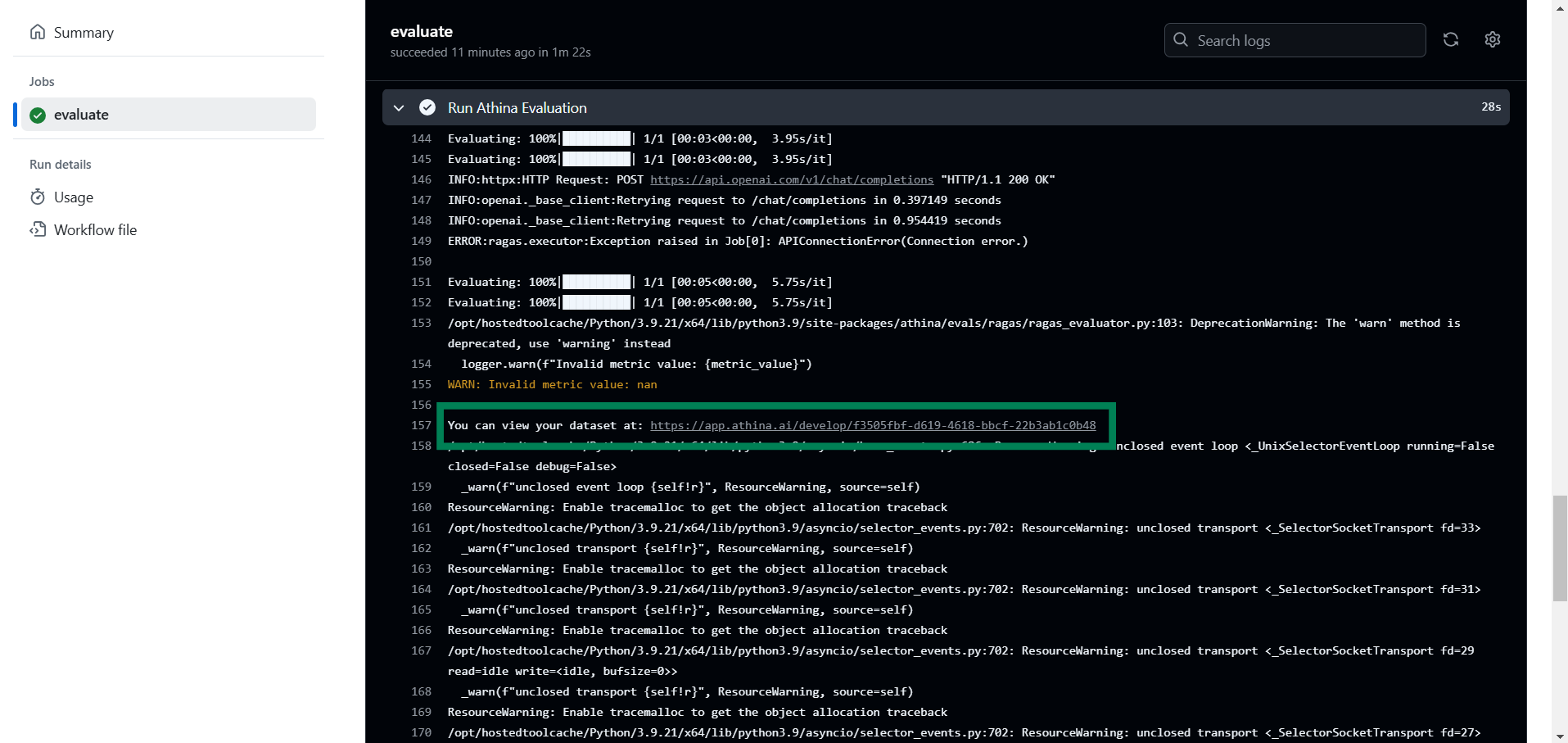

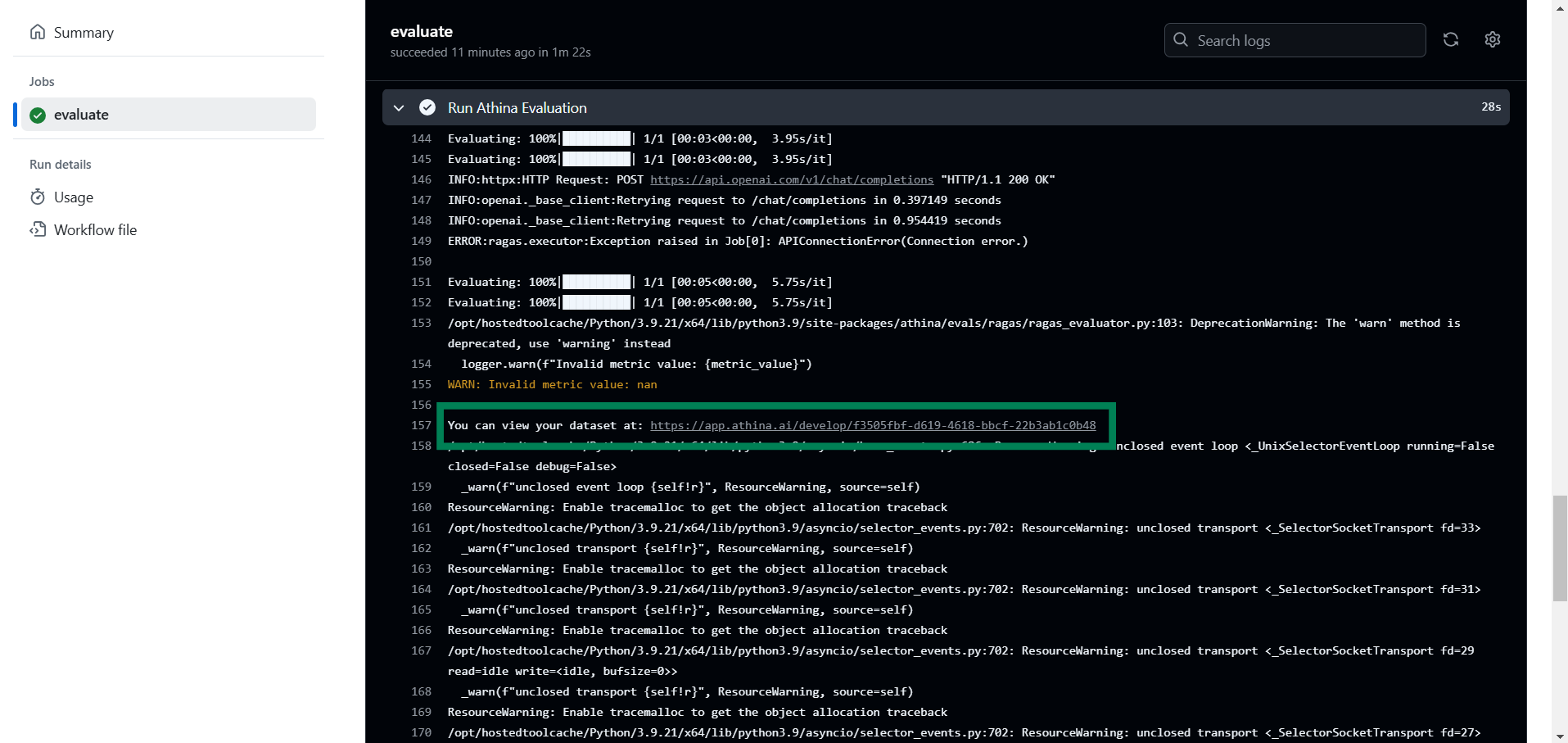

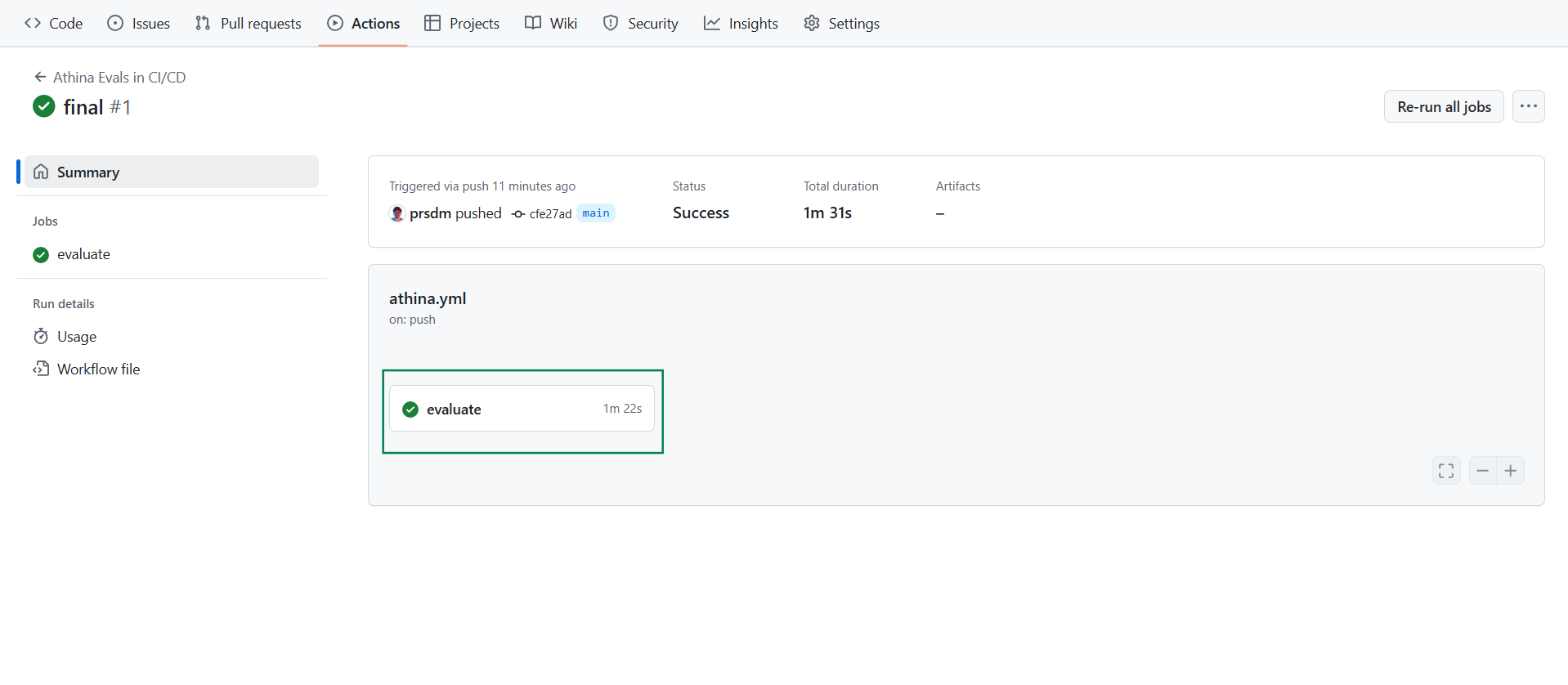

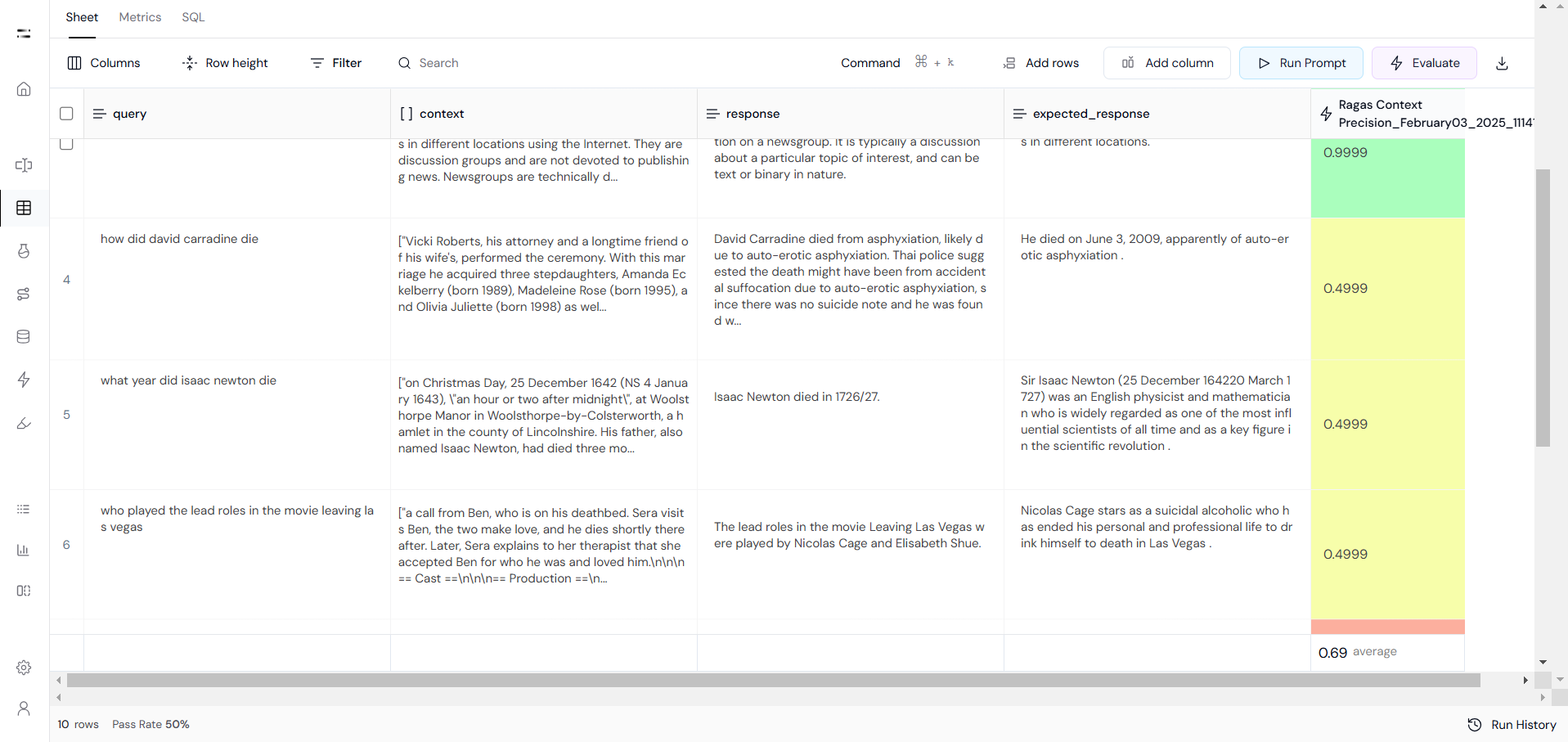

Step 3: Run GitHub Actions
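
When the workflow runs, anything the evaluation script prints appears in the job logs. If you also want the outcome on the run's summary page, GitHub Actions provides the path of a Markdown file in the `GITHUB_STEP_SUMMARY` environment variable. The sketch below is one possible helper, not part of Athina: `format_summary` and `publish_summary` are hypothetical names, and `results_url` stands for whatever results link your evaluation run reports.

```python
import os


def format_summary(passed, total, results_url=None):
    """Build a short Markdown summary of an evaluation run."""
    lines = ["## Evaluation results", "", f"- Passed: {passed}/{total}"]
    if results_url:
        lines.append(f"- Details: {results_url}")
    return "\n".join(lines) + "\n"


def publish_summary(text, env=None):
    """Print the summary to the logs and append it to the job summary file.

    Returns True if the job summary file was written, False when
    GITHUB_STEP_SUMMARY is not set (for example, on a local run).
    """
    env = os.environ if env is None else env
    print(text, end="")
    path = env.get("GITHUB_STEP_SUMMARY")
    if not path:
        return False
    # Append rather than overwrite so earlier summary content is kept.
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(text)
    return True
```

Calling `publish_summary(format_summary(passed, total, results_url))` at the end of the evaluation script works both locally, where it only prints, and inside a workflow run.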

Step 4: Check Results in Athina

Open Athina Datasets to check the logged dataset and evaluation results, or click the link in the GitHub workflow logs to view your dataset and evaluation metrics, as shown in the image.