Evaluating a conversation generated by a Large Language Model (LLM) is essential to ensure that responses are accurate, engaging, and contextually relevant. Unlike single-response LLM evaluation, conversation assessment analyzes multi-turn interactions, focusing on response coherence, memory retention, and overall user experience.

In the Athina IDE, you can create custom evaluation criteria to assess conversations using various metrics, ensuring they remain coherent, relevant, and safe.

Why Do We Need to Evaluate Conversations?

- Enhancing User Experience: By analyzing interactions, developers can identify areas where conversations may cause user frustration or confusion. This insight allows for adjustments that make dialogues more intuitive and engaging, leading to higher user satisfaction.

- Ensuring Accuracy and Reliability: Regular evaluation helps assess the ability of conversations to provide correct and relevant information. This is particularly crucial in sensitive domains like healthcare or finance, where misinformation can have serious consequences.

- Maintaining Ethical Standards: Continuous assessment ensures that conversations adhere to ethical guidelines, avoiding inappropriate or harmful exchanges. This is vital to prevent scenarios where discussions might inadvertently suggest dangerous actions or provide misleading advice.

- Improving Conversational Abilities: Evaluations can reveal limitations in natural language flow, allowing for targeted improvements. This leads to more natural and effective communication, enhancing the overall impact of the conversation.

Now, let’s go through the step-by-step evaluation process using custom evaluations in Athina Datasets.

Evaluate Conversations in Datasets

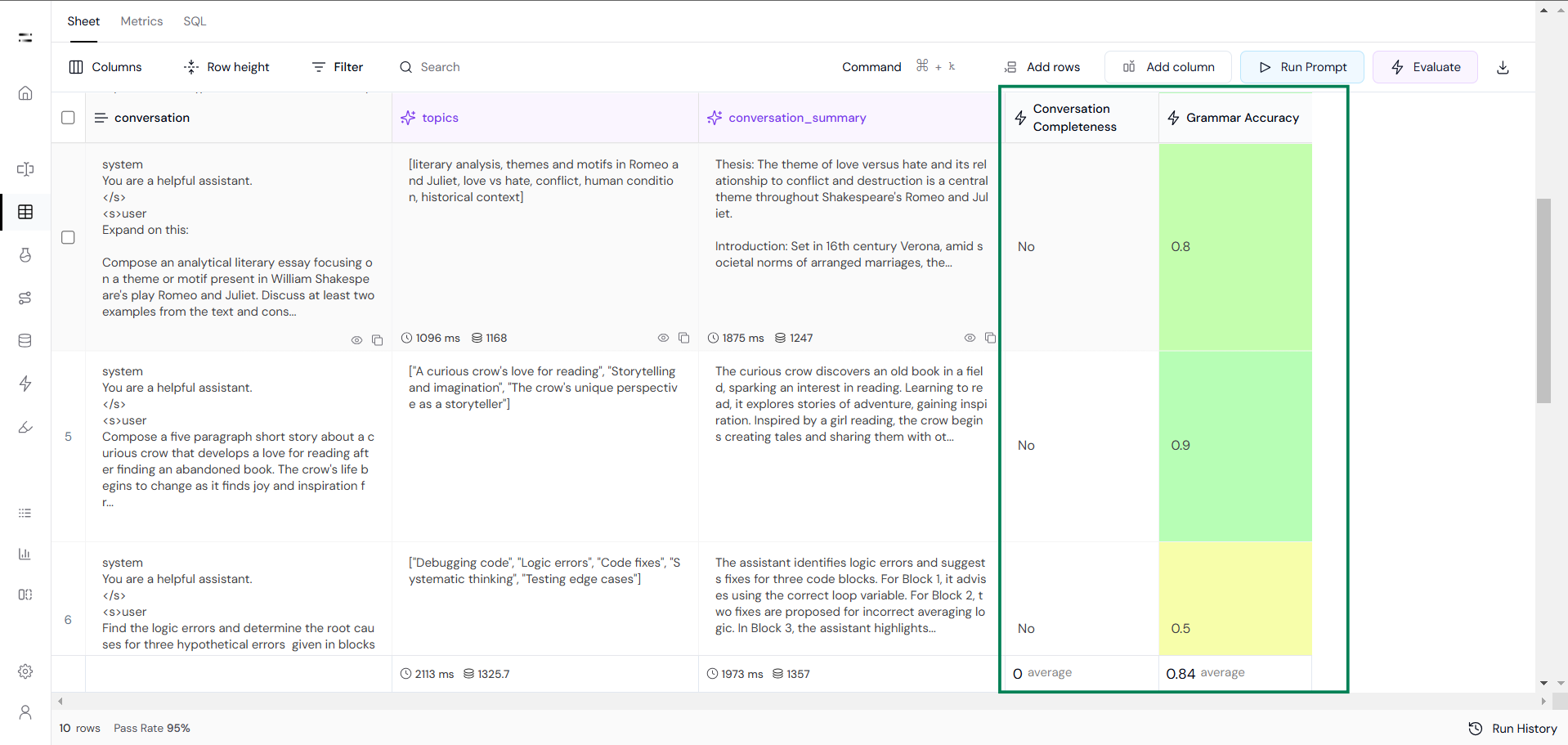

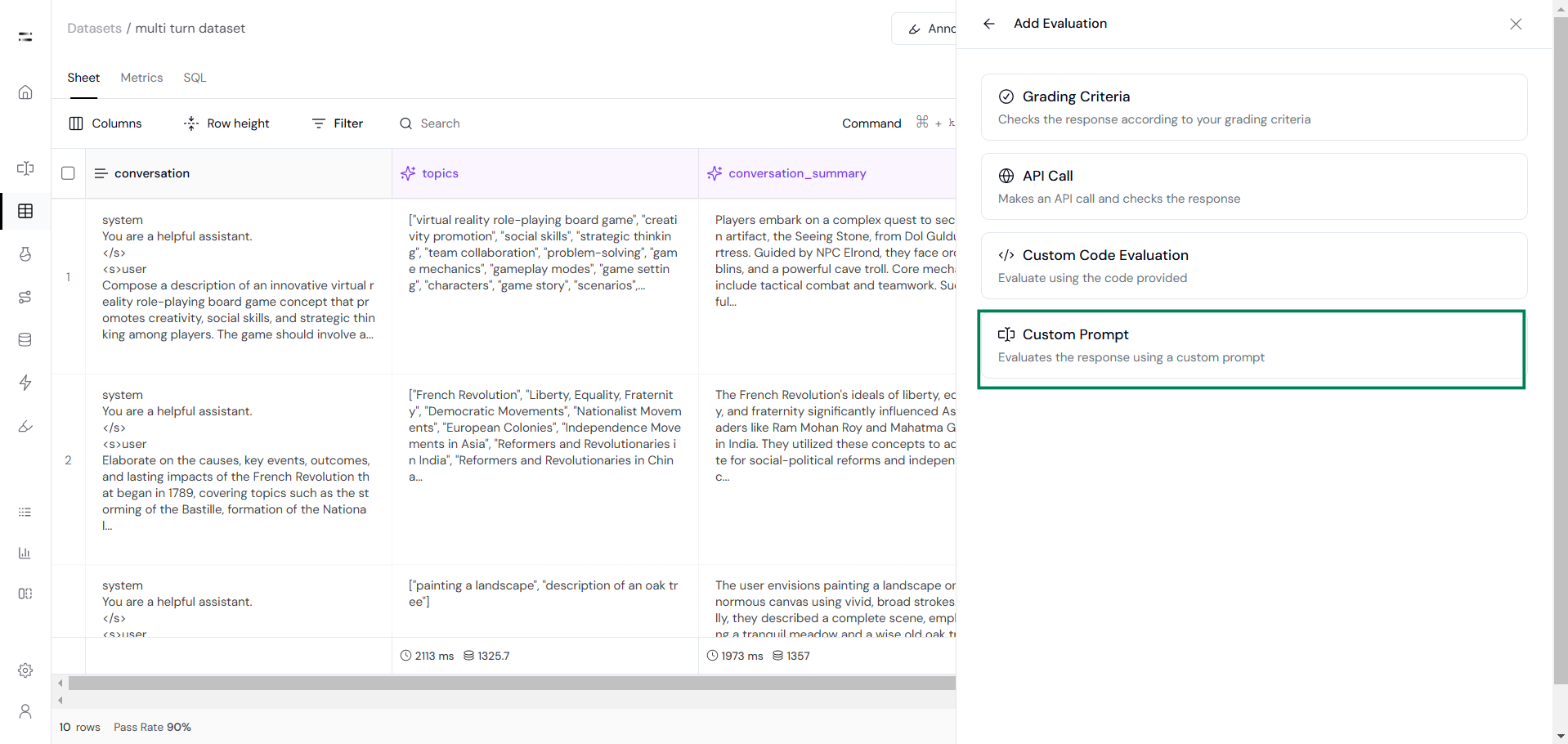

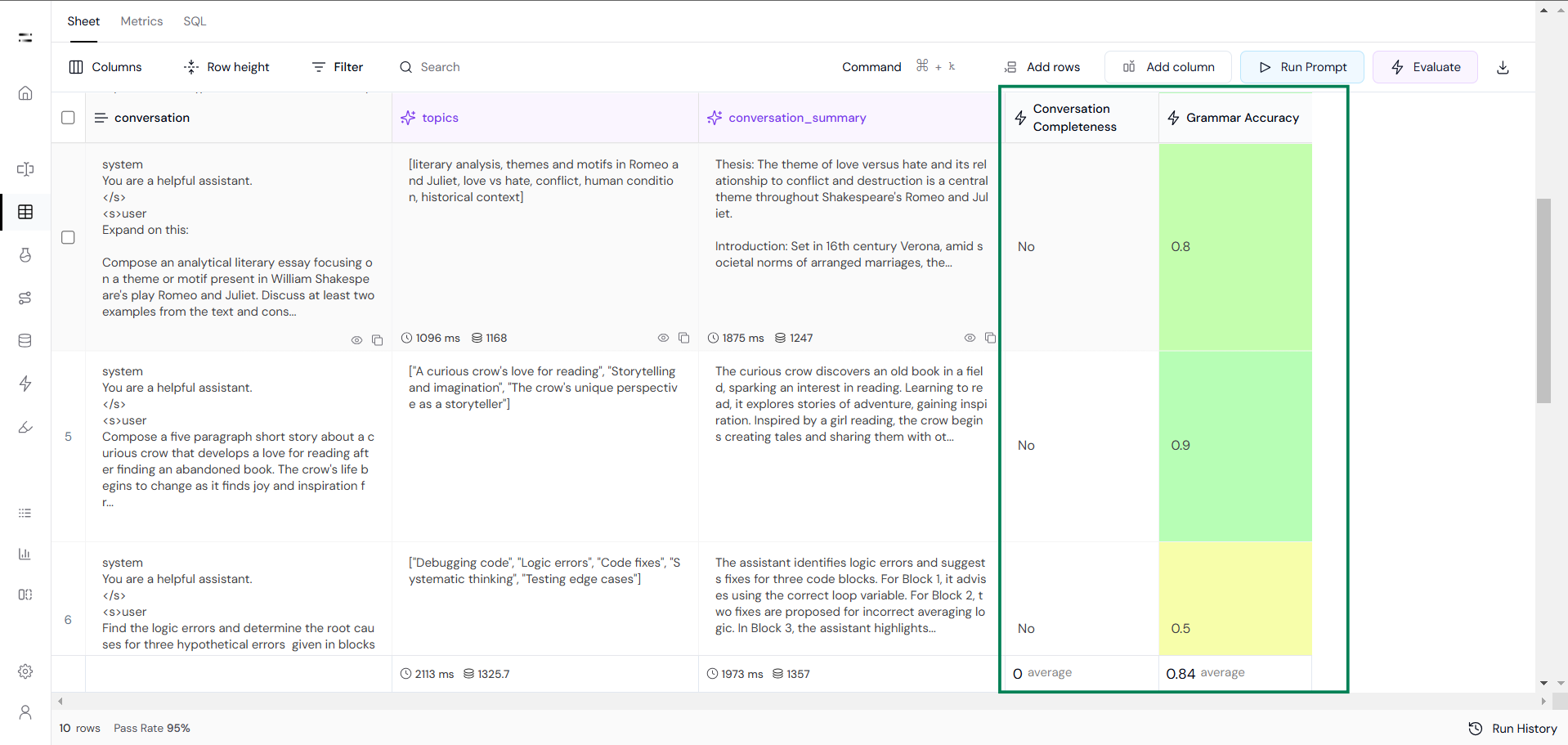

Here we use the TinyPixel/multiturn dataset from Hugging Face and create a Conversation Completeness custom eval to evaluate each conversation.

You can also use preset templates, such as Conversation Coherence, and safety evals, such as Harmfulness and Maliciousness, to evaluate your conversations.

Step 1: Create custom Evals

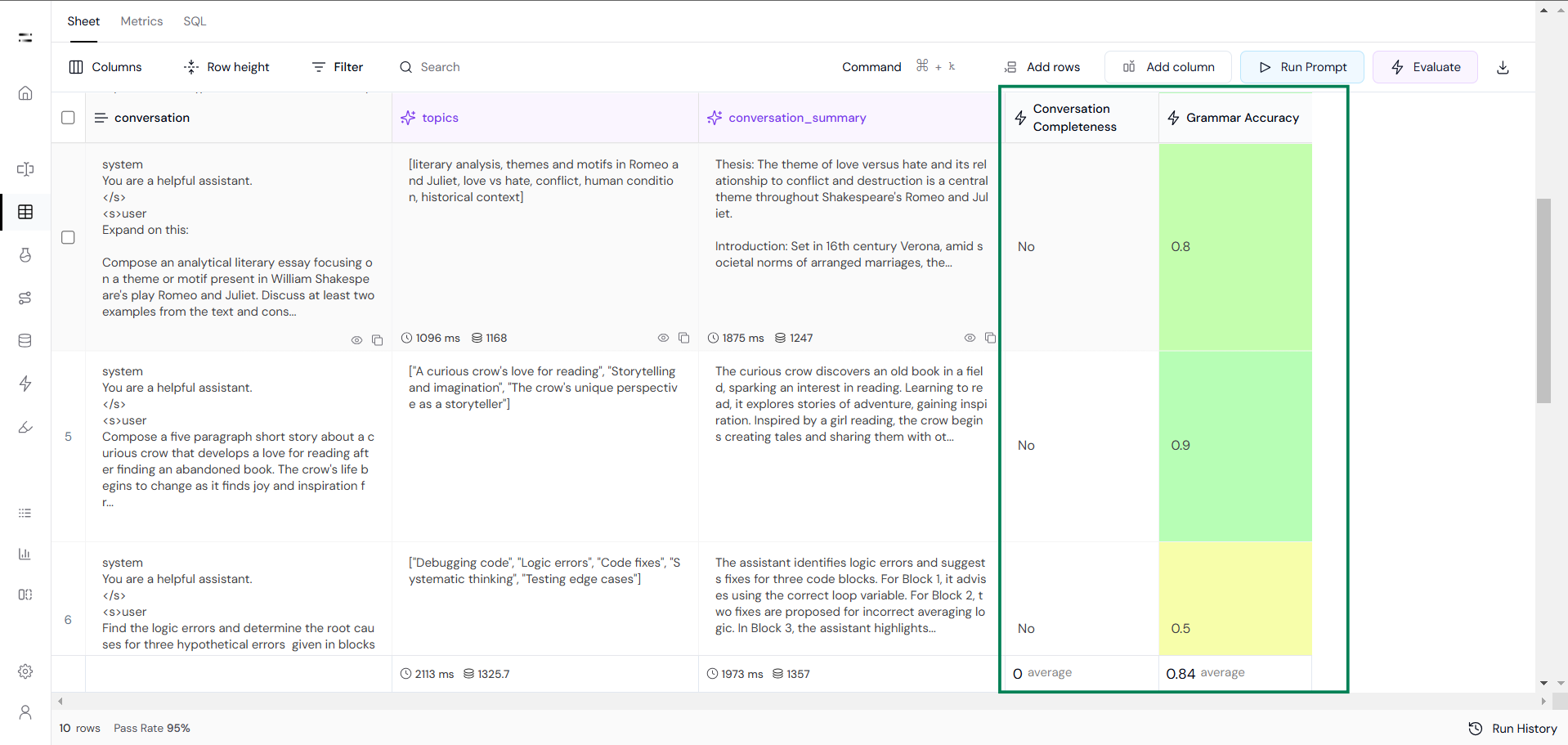

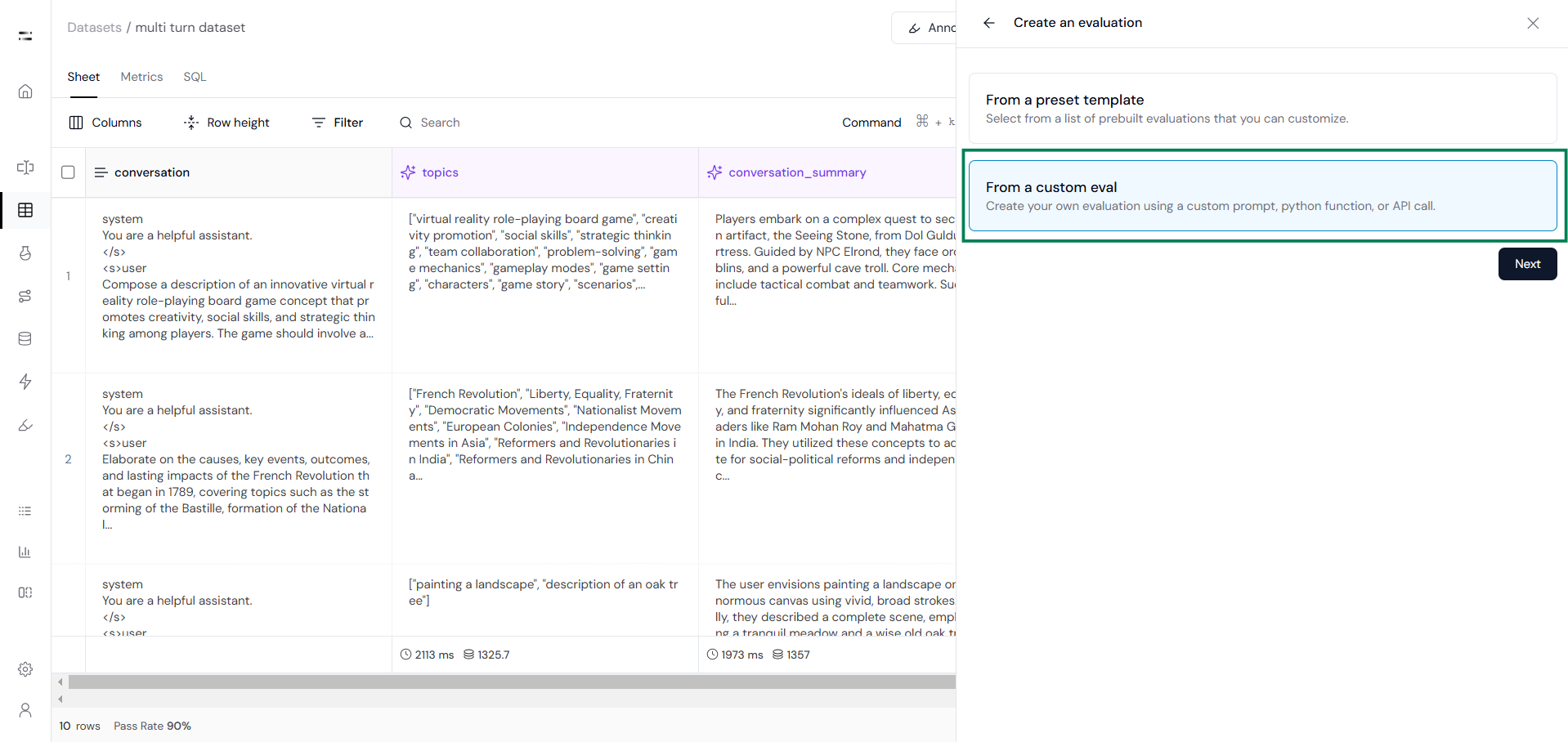

First, click Evaluate, then select Create New Evaluation and choose the Custom Eval option.

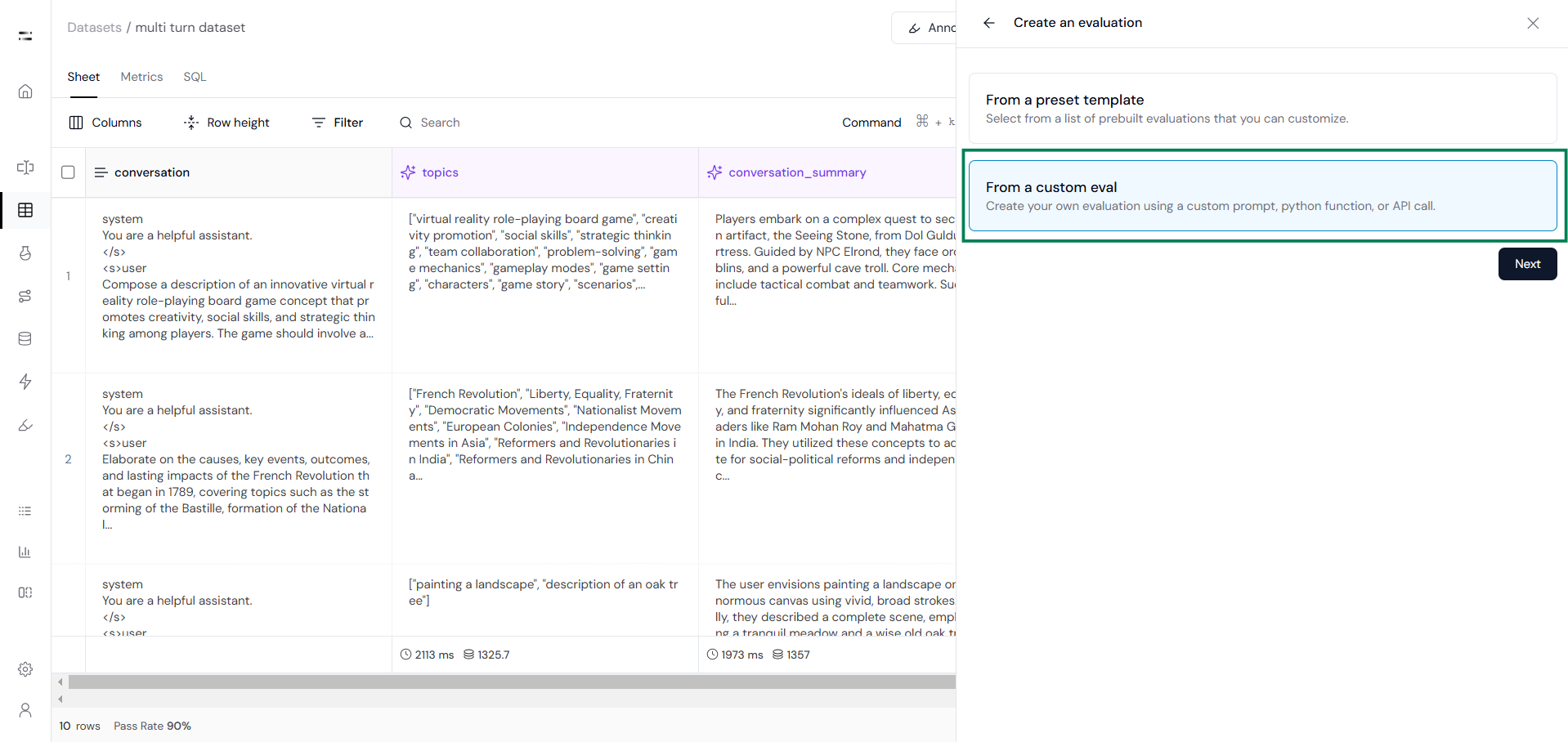

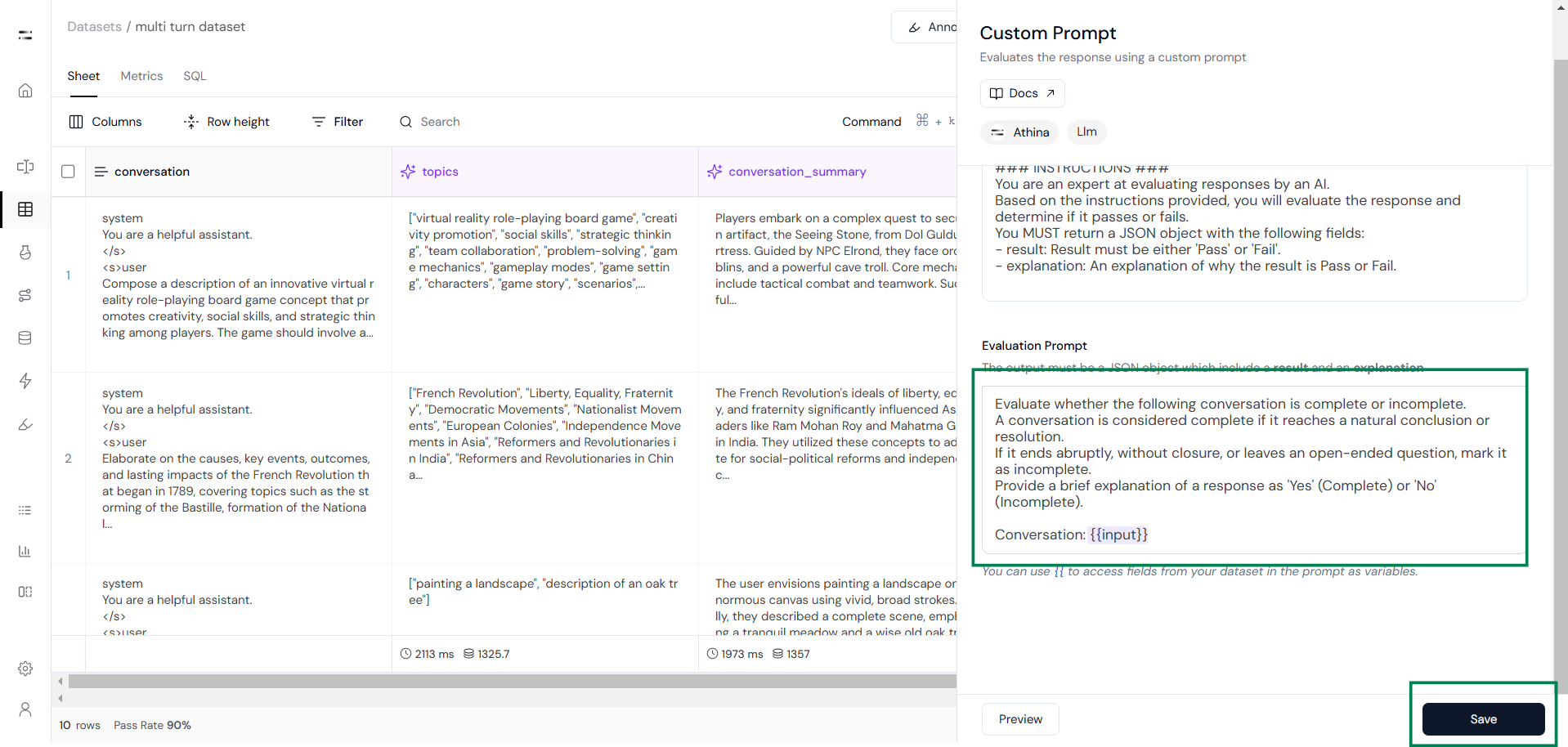

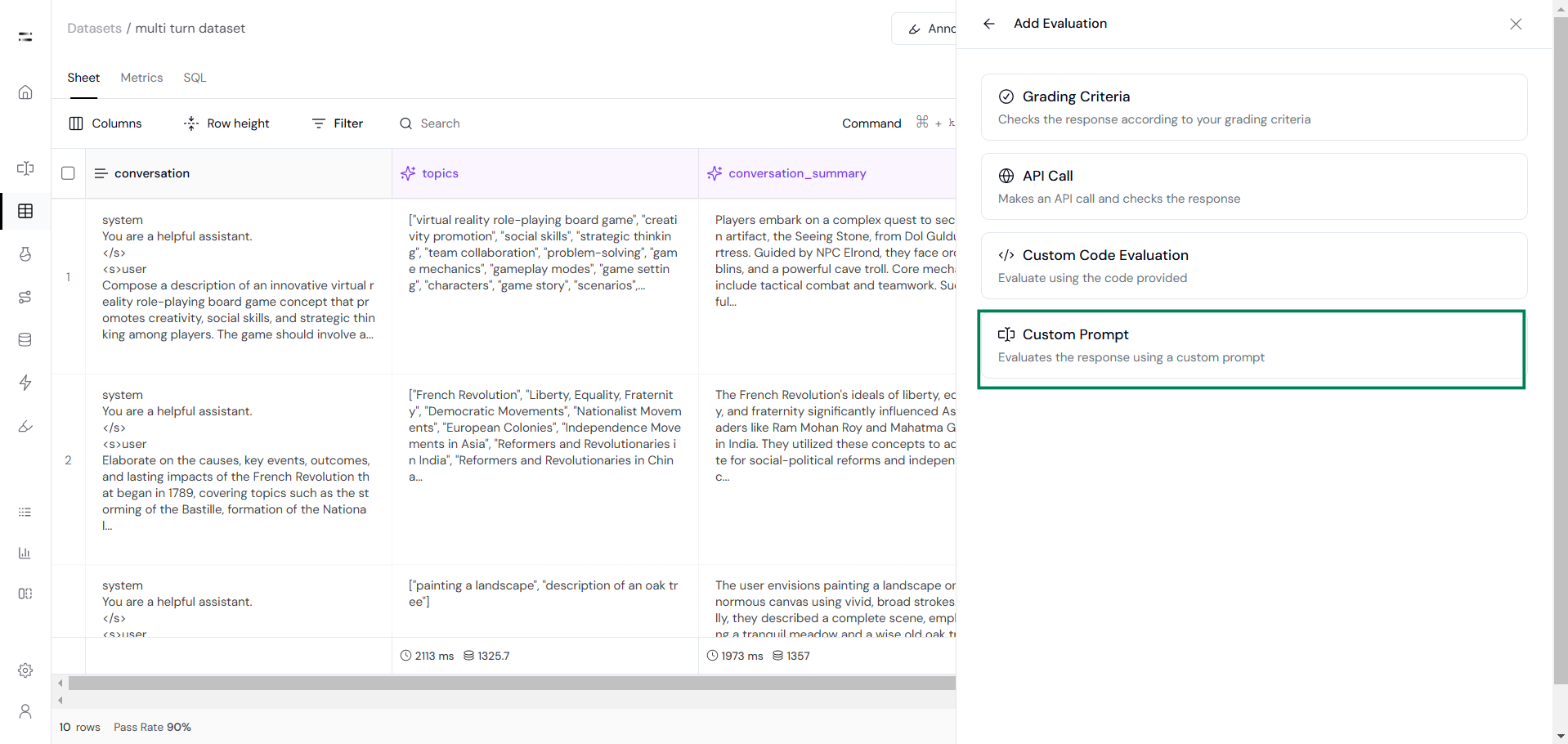

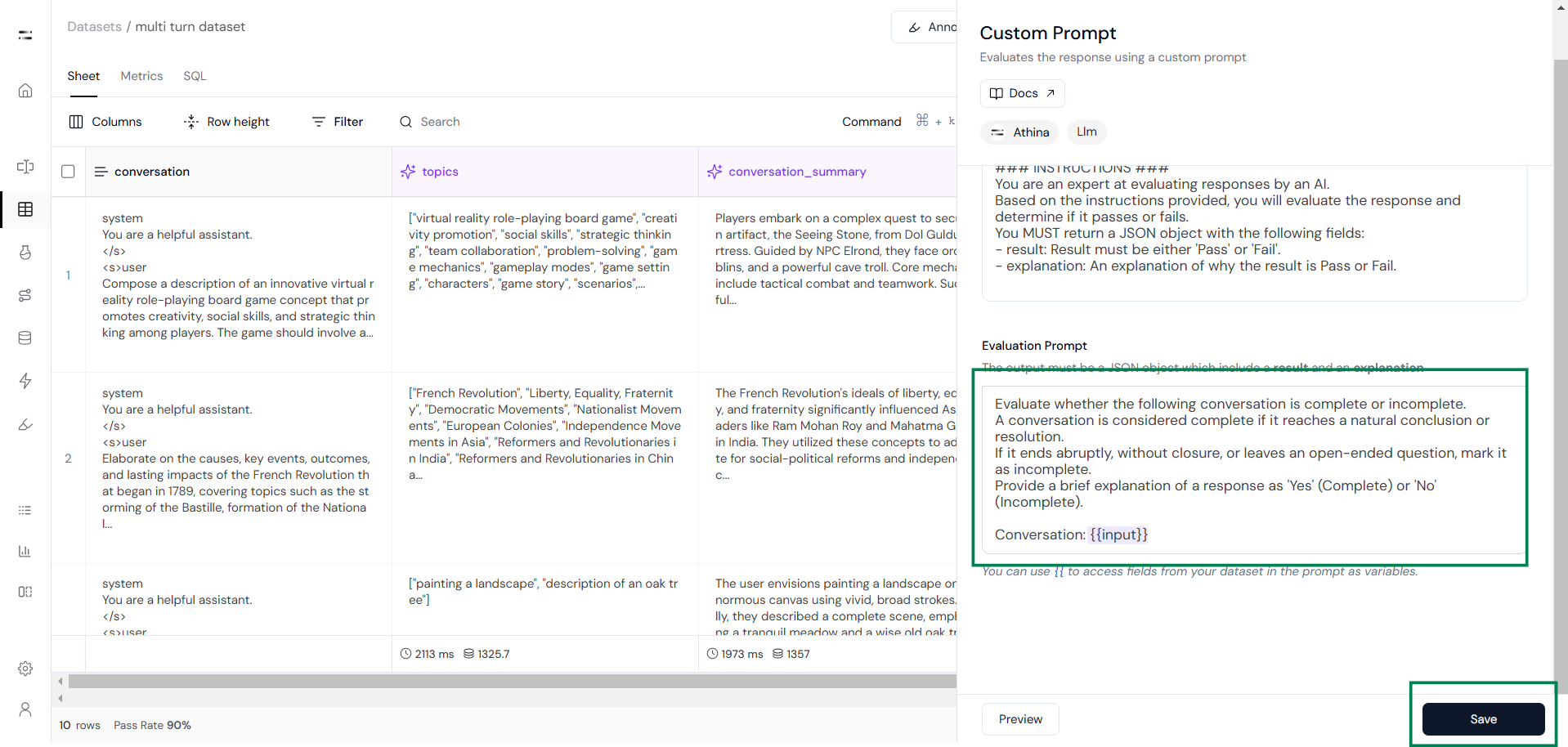

Then click the custom prompt option.

After that, add the Conversation Completeness evaluation prompt, as in the following example:

Evaluate whether the following conversation is complete or incomplete.

A conversation is considered complete if it reaches a natural conclusion or resolution.

If it ends abruptly, without closure, or leaves an open-ended question, mark it as incomplete.

Provide a response as either 'Yes' (Complete) or 'No' (Incomplete), with a brief explanation.

Conversation: {{input}}
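
To prototype the same check outside the UI, the sketch below shows one way the prompt could be filled in and the grader's answer interpreted. It is an illustration only: `render_prompt` and `parse_verdict` are hypothetical helper names, the `{{input}}` placeholder is filled with a plain string replacement (an assumption about how the template behaves), and nothing here calls Athina or an LLM.

```python
import re
from typing import Optional

# Prompt text copied from the example above; {{input}} marks where the
# conversation goes.
COMPLETENESS_PROMPT = (
    "Evaluate whether the following conversation is complete or incomplete.\n"
    "A conversation is considered complete if it reaches a natural conclusion or resolution.\n"
    "If it ends abruptly, without closure, or leaves an open-ended question, mark it as incomplete.\n"
    "Provide a response as either 'Yes' (Complete) or 'No' (Incomplete), with a brief explanation.\n"
    "Conversation: {{input}}"
)


def render_prompt(template: str, conversation: str) -> str:
    """Hypothetical helper: substitute the conversation for {{input}}."""
    return template.replace("{{input}}", conversation)


def parse_verdict(response: str) -> Optional[bool]:
    """Hypothetical helper: True for a leading 'Yes', False for a leading 'No'.

    Returns None when the response starts with neither word, so unclear
    answers can be reviewed by hand instead of being silently counted.
    """
    match = re.match(r"\W*(yes|no)\b", response, flags=re.IGNORECASE)
    if match is None:
        return None
    return match.group(1).lower() == "yes"


conversation = "User: Can you reset my password?\nAssistant: Done. Anything else?\nUser: No, thanks!"
prompt = render_prompt(COMPLETENESS_PROMPT, conversation)
```

Returning `None` for an unparseable answer is a design choice in this sketch: it keeps ambiguous grader output separate from a real 'No'.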

Similarly, add the Grammar Accuracy evaluation prompt:

Evaluate the grammatical correctness, sentence structure, and fluency of the following conversation.

Identify any grammatical mistakes, awkward phrasings, or inconsistencies.

Provide a score (0-1) along with suggested corrections and explanations.

Conversation: {{input}}
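
Because this prompt asks for a numeric score alongside free-text corrections, any downstream processing has to pull the number out of the response. The sketch below is one heuristic way to do that, assuming the grader writes the score in a form like "Score: 0.85". `parse_score` is a hypothetical helper, not part of Athina, and the response format is an assumption.

```python
import re
from typing import Optional


def parse_score(response: str) -> Optional[float]:
    """Hypothetical helper: extract a 0-1 score written as 'Score: <number>'.

    Returns None if no such pattern is found or the number falls outside
    the 0-1 range requested by the prompt.
    """
    match = re.search(
        r"score\s*[:=]?\s*(\d+(?:\.\d+)?)", response, flags=re.IGNORECASE
    )
    if match is None:
        return None
    value = float(match.group(1))
    # Reject values outside the range requested by the prompt.
    return value if 0.0 <= value <= 1.0 else None
```

For example, `parse_score("Score: 0.85. Suggested corrections: ...")` yields `0.85`, while a response that gives a score on a different scale, such as "Score: 10", is rejected rather than rescaled.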

Step 2: Run the Evaluation

Then, run the evaluations to check whether the conversation is complete or incomplete and to assess grammar accuracy based on the defined criteria.

After the evaluations are complete, go to the Metrics section to review the results.