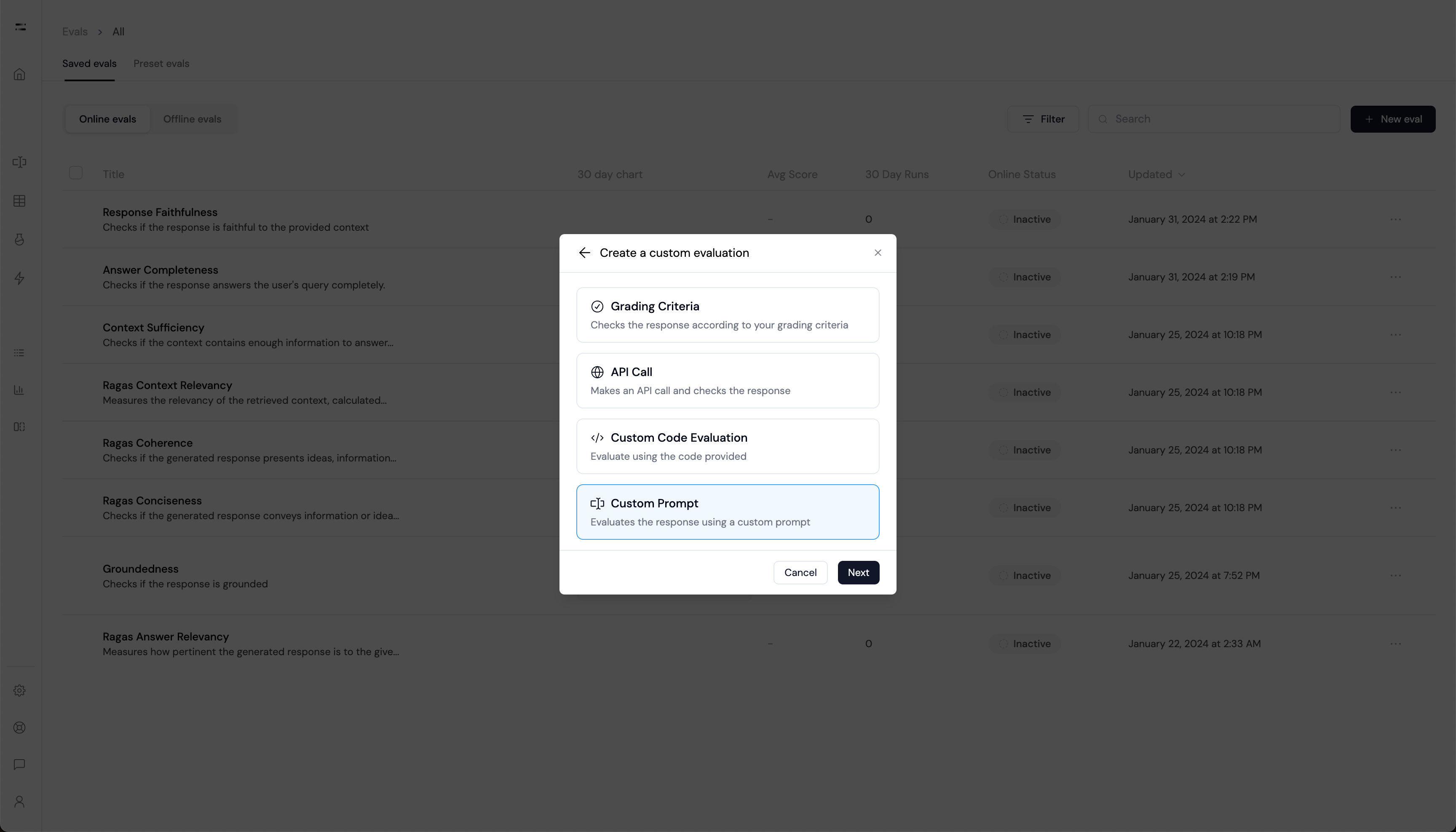

Create Custom Evals in the UI

You can also create custom evaluators. See here for more information.

Create Custom Evals Programmatically

Grading Criteria

Pass or fail a response based on a custom criterion, phrased as “If X, then fail. Otherwise pass.”
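To make the “If X, then fail. Otherwise pass.” shape concrete, here is a minimal sketch that uses a plain Python predicate as the criterion. It is not the library's implementation, and in practice the criterion would likely be judged by a model rather than by a string check. `GradeResult`, `grade`, and `mentions_refund` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GradeResult:
    passed: bool
    reason: str


def grade(response: str, fail_if: Callable[[str], bool], description: str) -> GradeResult:
    """Fail when the criterion `fail_if` holds for the response, otherwise pass."""
    if fail_if(response):
        return GradeResult(passed=False, reason=f"Failed: {description}")
    return GradeResult(passed=True, reason=f"Passed: criterion not met ({description})")


# Hypothetical criterion: "If the response promises a refund, then fail."
def mentions_refund(response: str) -> bool:
    return "refund" in response.lower()


ok = grade("Your order ships tomorrow.", mentions_refund, "response promises a refund")
bad = grade("We will issue a refund today.", mentions_refund, "response promises a refund")
print(ok.passed, bad.passed)
```

Keeping the criterion as a single failure condition means every response has an unambiguous default: anything that does not trigger the condition passes.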

Custom Prompt

Use a completely custom prompt for evaluation.
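A custom evaluation prompt can be thought of as a template that is filled in with the data under test and sent to a model, whose reply is then parsed into a verdict. The sketch below assumes a JSON reply format and placeholder names (`query`, `response`) that are not taken from the library. `run_custom_prompt_eval` and `fake_model` are hypothetical, and the model call is stubbed so the example runs offline.

```python
import json
from typing import Callable

# Hypothetical template; the placeholder names are assumptions for this sketch.
EVAL_PROMPT = (
    "You are grading an answer.\n"
    "Question: {query}\n"
    "Answer: {response}\n"
    'Reply with JSON: {{"result": "pass" or "fail", "explanation": "..."}}'
)


def run_custom_prompt_eval(query: str, response: str, call_model: Callable[[str], str]) -> dict:
    """Fill the template, send it to a model, and parse the JSON verdict."""
    prompt = EVAL_PROMPT.format(query=query, response=response)
    raw = call_model(prompt)
    verdict = json.loads(raw)
    return {"passed": verdict["result"] == "pass", "explanation": verdict["explanation"]}


# Stand-in for a real LLM call so the sketch runs without network access.
def fake_model(prompt: str) -> str:
    return json.dumps({"result": "pass", "explanation": "The answer addresses the question."})


result = run_custom_prompt_eval("What is 2 + 2?", "4", fake_model)
print(result)
```

Asking the model for a structured reply keeps the parsing step simple. A production version would also need to handle replies that are not valid JSON.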

API Call

Use the ApiCall evaluator to make a call to a custom endpoint where you are hosting your evaluation logic.

Custom Code

Use the CustomCodeEval to run your own Python code as an evaluator.

Create Your Own

Create your own evaluator by extending the BaseEvaluator class.
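The general pattern of extending a base evaluator class can be sketched as follows. This section does not show the real BaseEvaluator interface, so the example defines its own stand-in, `BaseEvaluatorSketch`, with an assumed `evaluate` method. The required methods and return types of the actual class may differ, and `MaxLengthEvaluator` is a hypothetical subclass.

```python
from abc import ABC, abstractmethod


class BaseEvaluatorSketch(ABC):
    """Hypothetical stand-in; the real BaseEvaluator interface may differ."""

    @abstractmethod
    def evaluate(self, response: str) -> dict:
        """Return a result dict for a single response."""

    def run(self, responses: list[str]) -> list[dict]:
        # Apply the subclass's evaluation logic to each response in turn.
        return [self.evaluate(r) for r in responses]


class MaxLengthEvaluator(BaseEvaluatorSketch):
    """Passes a response only if it fits within `max_chars` characters."""

    def __init__(self, max_chars: int) -> None:
        self.max_chars = max_chars

    def evaluate(self, response: str) -> dict:
        passed = len(response) <= self.max_chars
        return {"passed": passed, "length": len(response)}


results = MaxLengthEvaluator(max_chars=20).run(["short answer", "x" * 50])
print(results)
```

The subclass supplies only the per-response logic, while shared behavior such as iterating over a dataset stays in the base class.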