Major Challenges

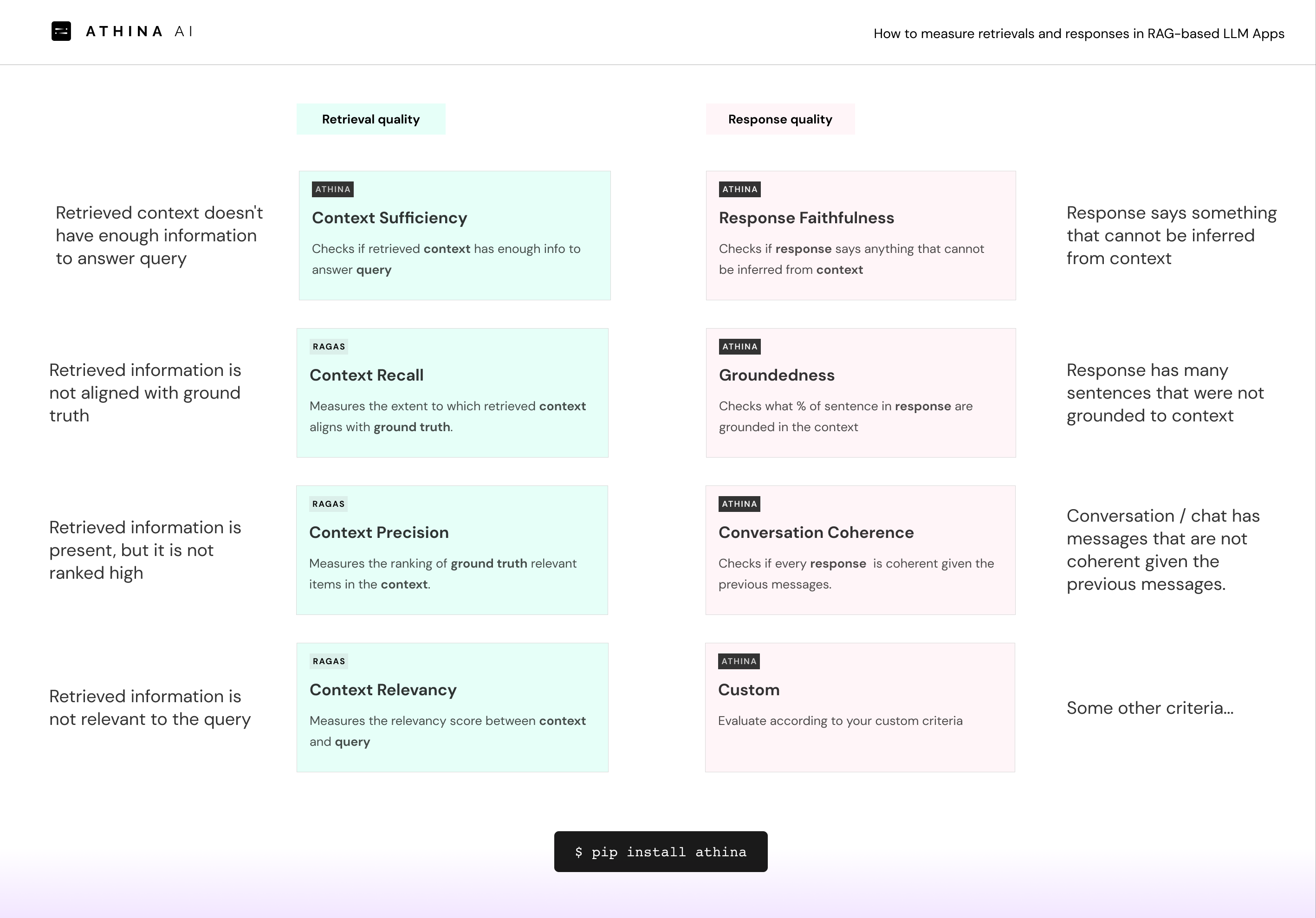

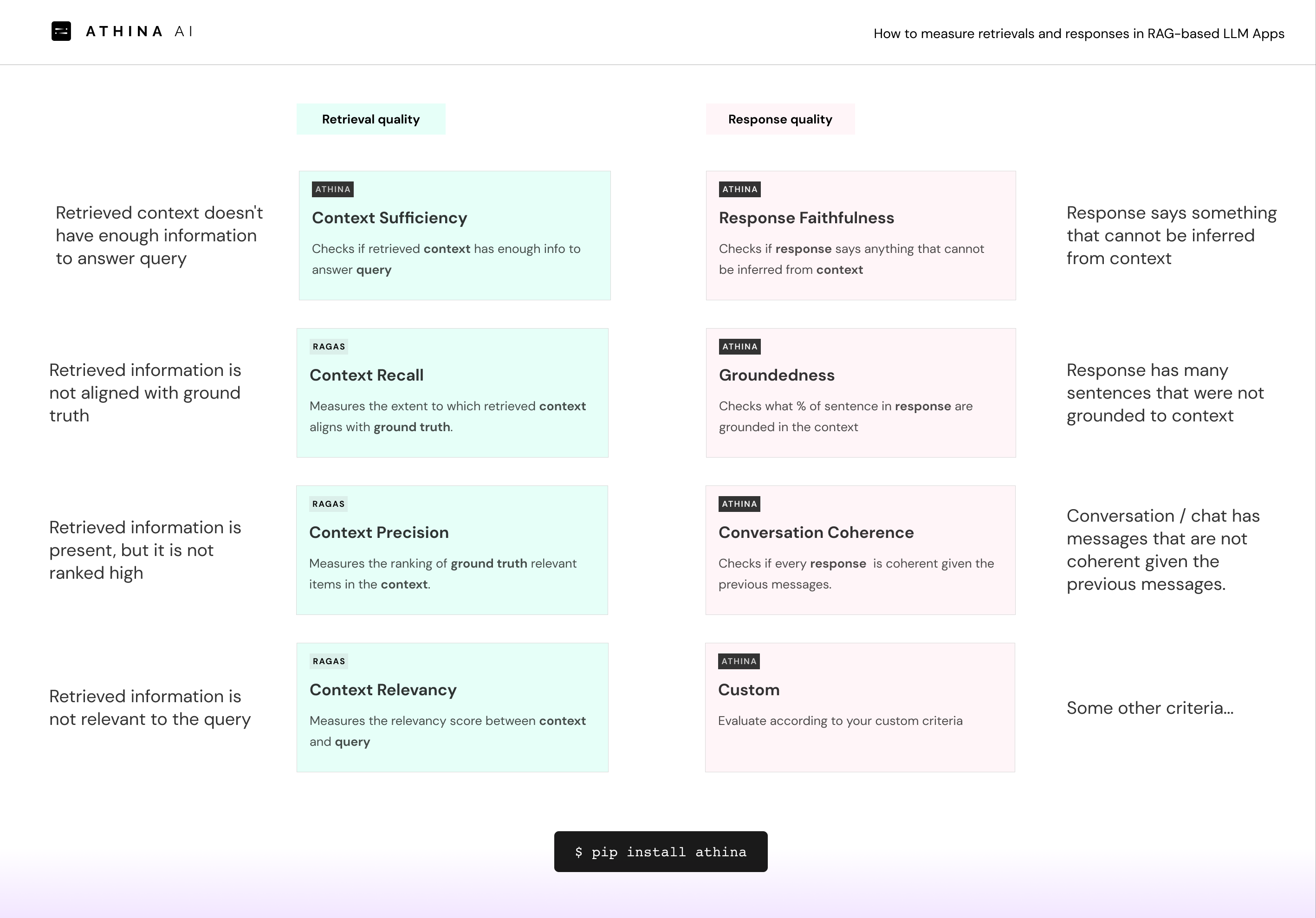

No ground truth in production

Unlike your test dataset in development, production logs don’t include any ground truth.

Solution:

- You have to use creative techniques (often using another LLM) to evaluate retrievals and responses without ground truth.

- Keep up with current research so you can add more evaluation metrics and improve their reliability
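Here is a minimal sketch of a reference-free check that uses a second LLM as the judge. The prompt wording, the `faithfulness_eval` name, and the `judge` callable (any function that sends a prompt to an LLM and returns its text reply) are assumptions made for illustration, not a specific library's API.

```python
from typing import Callable, Optional

# Hypothetical prompt template; the wording is an assumption, not a standard.
FAITHFULNESS_PROMPT = (
    "Context:\n{context}\n\n"
    "Response:\n{response}\n\n"
    "Is every claim in the response supported by the context? "
    "Answer with exactly one word: YES or NO."
)


def faithfulness_eval(context: str, response: str, judge: Callable[[str], str]) -> dict:
    """Grade a response against its retrieved context, with no ground-truth answer."""
    prompt = FAITHFULNESS_PROMPT.format(context=context, response=response)
    reply = judge(prompt).strip().upper()
    # Look only at the first word so "Yes." or "NO, because ..." still parse.
    words = reply.split()
    first_word = words[0].strip(".,!:") if words else ""
    passed: Optional[bool]
    if first_word == "YES":
        passed = True
    elif first_word == "NO":
        passed = False
    else:
        passed = None  # the judge ignored the format; flag for manual review
    return {"eval": "faithfulness", "passed": passed, "raw_reply": reply}
```

Returning `None` for an unparseable verdict keeps judge failures visible instead of silently counting them as passes or fails.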

Cost Management

If you need to use an LLM for evaluation, it can get expensive. Imagine running 5-10 evaluations per production log: the evaluation costs could be higher than the actual task costs!

Solution: Implement a sampling and cost-tracking mechanism.
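One way to combine sampling with cost tracking is sketched below. The names (`CostTracker`, `run_sampled_evals`), the flat per-call cost estimate, and the log shape (a dict with an `id` key) are all assumptions for illustration.

```python
import random
from dataclasses import dataclass, field


@dataclass
class CostTracker:
    """Tracks estimated eval spend against a fixed budget (USD)."""
    budget_usd: float
    spent_usd: float = 0.0
    by_eval: dict = field(default_factory=dict)

    def can_spend(self, cost_usd: float) -> bool:
        return self.spent_usd + cost_usd <= self.budget_usd

    def record(self, eval_name: str, cost_usd: float) -> None:
        self.spent_usd += cost_usd
        self.by_eval[eval_name] = self.by_eval.get(eval_name, 0.0) + cost_usd


def run_sampled_evals(logs, evals, sample_rate, tracker, rng=None):
    """Run evals on a random sample of logs, stopping when the budget runs out.

    `evals` maps an eval name to (eval_fn, estimated_cost_per_call_usd).
    """
    rng = rng or random.Random()
    results = []
    for log in logs:
        if rng.random() >= sample_rate:
            continue  # this log was not sampled
        for name, (eval_fn, est_cost) in evals.items():
            if not tracker.can_spend(est_cost):
                continue  # budget exhausted for an eval this expensive
            results.append((log["id"], name, eval_fn(log)))
            tracker.record(name, est_cost)
    return results
```

With a 10% sample rate and 5 evals per sampled log, you pay for roughly 0.5 eval calls per production log instead of 5, and the tracker caps the total spend.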

Configurability

You need:

- a library of evals

- options to configure the Evals

- options for creating custom evals

- swappable models / providers

- integrations with other eval libraries like Ragas, Guardrails, etc
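As a rough sketch, the list above could map onto a small registry plus a config object. Everything here (`EVAL_REGISTRY`, `register_eval`, `EvalConfig`, the model name) is hypothetical and only illustrates the shape of such a design.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict

# An eval takes a log and its parameters and returns a score in [0, 1].
EvalFn = Callable[[dict, dict], float]
EVAL_REGISTRY: Dict[str, EvalFn] = {}  # the "library of evals"


def register_eval(name: str):
    """Decorator that adds a built-in or custom eval to the registry."""
    def decorator(fn: EvalFn) -> EvalFn:
        EVAL_REGISTRY[name] = fn
        return fn
    return decorator


@register_eval("max_length")
def max_length(log: dict, params: dict) -> float:
    return 1.0 if len(log["response"]) <= params.get("max_chars", 500) else 0.0


@register_eval("has_citation")  # a custom eval registers the same way
def has_citation(log: dict, params: dict) -> float:
    return 1.0 if re.search(r"\[\d+\]", log["response"]) else 0.0


@dataclass
class EvalConfig:
    name: str
    params: dict = field(default_factory=dict)
    model: str = "judge-model-a"  # hypothetical; swap per provider


def run_eval(config: EvalConfig, log: dict) -> float:
    eval_fn = EVAL_REGISTRY[config.name]
    # The model is passed through params so LLM-backed evals can pick it up;
    # the deterministic evals above simply ignore it.
    return eval_fn(log, {**config.params, "model": config.model})
```

Because a config is plain data, it can be stored, versioned, and changed without touching the eval code. An adapter for an external eval library would register itself the same way.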

Automation

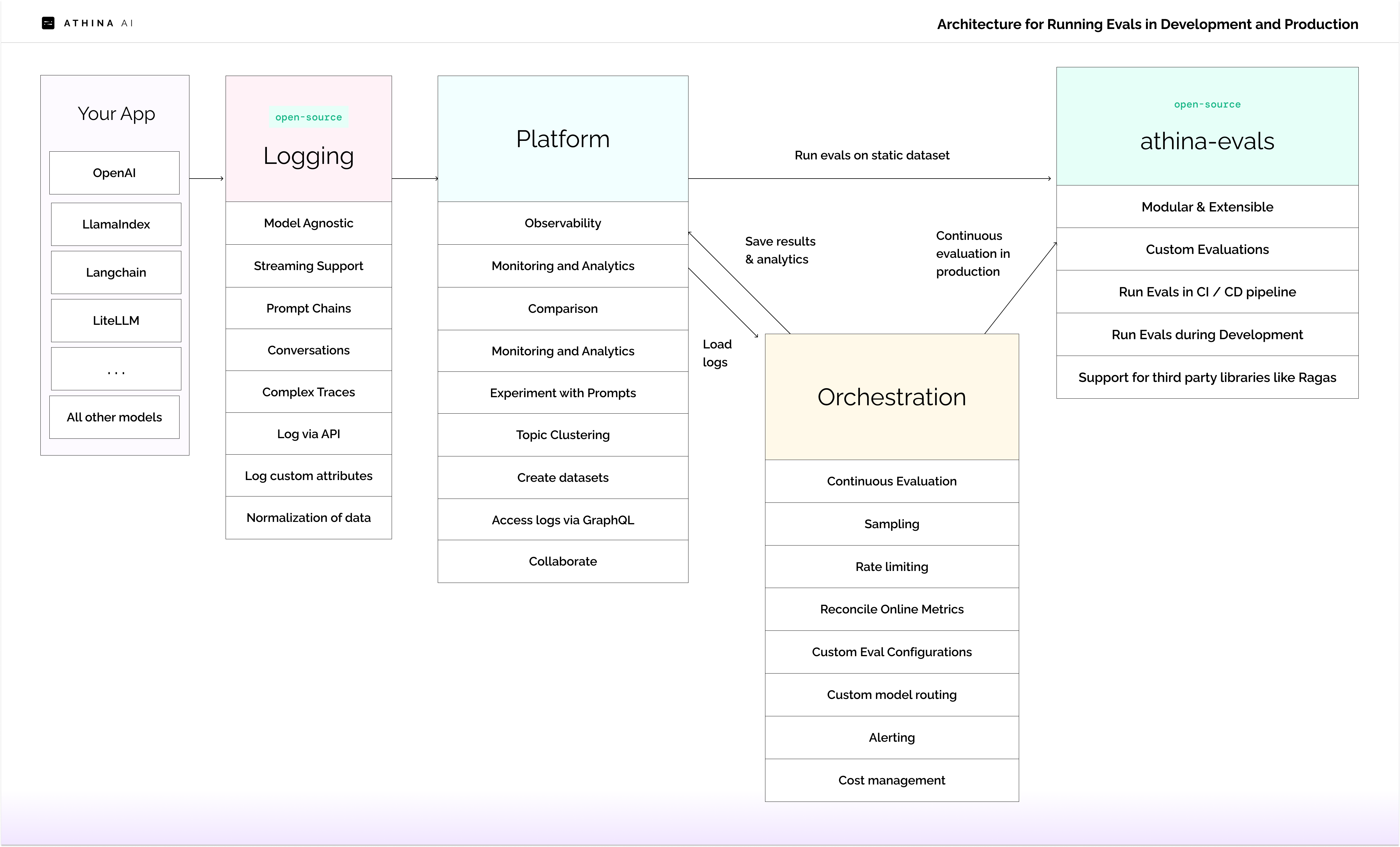

Needless to say, running evals in production should be automated and continuous. That poses a number of challenges at scale. This means:

- You need to scale your evaluation infrastructure to meet your logging throughput

- You need a way to configure evals and store configuration

- You need a way to select which evals should run on which prompts

- You need mechanisms to handle rate limiting

- You need evals that can run on swappable models / providers

- You need a way to run a newly configured evaluation against old logs
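Three of these points (selecting evals per prompt, handling rate limits, and backfilling old logs) can be sketched together. The `RateLimitError` class, the rule format, and the log fields `id` and `prompt_slug` are assumptions for illustration. A real setup would catch whatever exception your provider's client raises.

```python
import time


class RateLimitError(Exception):
    """Hypothetical error an eval raises when the provider throttles us."""


def call_with_backoff(eval_fn, log, max_retries=3, base_delay=1.0, sleep=time.sleep):
    """Retry a rate-limited eval with exponential backoff (1s, 2s, 4s, ...)."""
    for attempt in range(max_retries + 1):
        try:
            return eval_fn(log)
        except RateLimitError:
            if attempt == max_retries:
                raise
            sleep(base_delay * 2 ** attempt)


def select_evals(log, rules):
    """`rules` is a list of (prompt_slug, eval_name); '*' matches every prompt."""
    return [name for slug, name in rules if slug in ("*", log["prompt_slug"])]


def backfill(logs, rules, evals, done, sleep=time.sleep):
    """Run each selected eval that has not run yet.

    `done` is a set of (log_id, eval_name) pairs that already have results,
    so a newly configured eval runs on old logs without repeating old work.
    """
    results = {}
    for log in logs:
        for name in select_evals(log, rules):
            key = (log["id"], name)
            if key in done:
                continue
            results[key] = call_with_backoff(evals[name], log, sleep=sleep)
            done.add(key)
    return results
```

Passing `sleep` in as a parameter keeps the backoff logic testable without real waiting.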

Support for different models, architectures, and traces

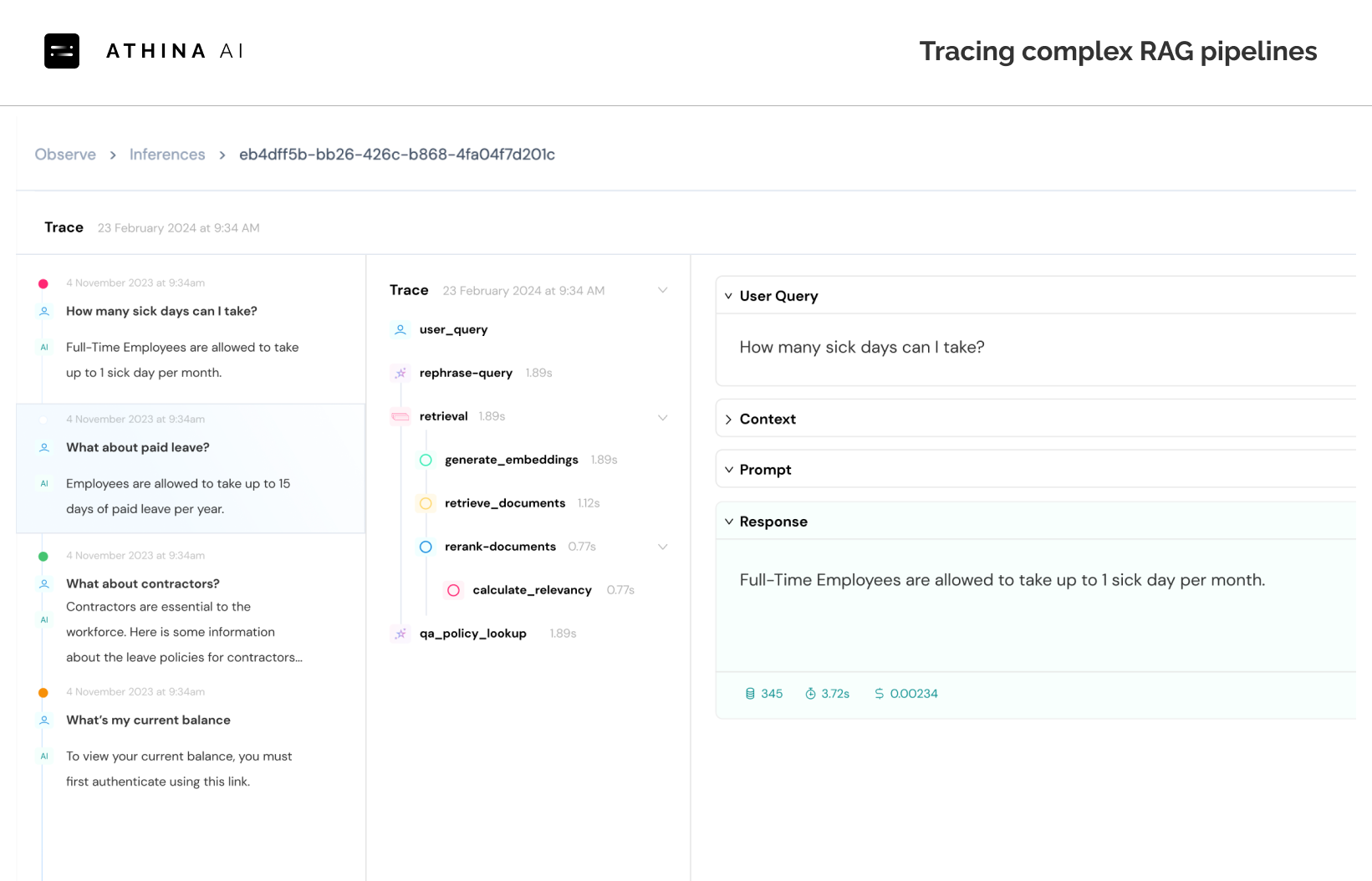

Say your team wants to switch from OpenAI to Gemini. Suppose you add a new step to your LLM pipeline. Maybe you’re building an agent and need to support complex traces? Maybe you switched from Langchain to Llama Index? Maybe you’re building a chat application and need special evals for that?

Can your logging and evaluation infrastructure support this?

Solution: You need a normalization layer that is separate from your evaluation infrastructure.
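A normalization layer could look roughly like the sketch below: one adapter per provider, all producing the same record, so the evals never see provider-specific payloads. The `NormalizedLog` schema is hypothetical. The raw payload shapes are simplified assumptions modeled on the providers' public response formats, so verify the field names against the current API docs before relying on them.

```python
from dataclasses import dataclass


@dataclass
class NormalizedLog:
    """Provider-independent record that every eval consumes (hypothetical schema)."""
    provider: str
    model: str
    response: str
    prompt_tokens: int
    completion_tokens: int


def from_openai(raw: dict) -> NormalizedLog:
    # Assumed shape: choices[0].message.content plus a usage block.
    usage = raw.get("usage", {})
    return NormalizedLog(
        provider="openai",
        model=raw.get("model", "unknown"),
        response=raw["choices"][0]["message"]["content"],
        prompt_tokens=usage.get("prompt_tokens", 0),
        completion_tokens=usage.get("completion_tokens", 0),
    )


def from_gemini(raw: dict) -> NormalizedLog:
    # Assumed shape: candidates[0].content.parts[*].text plus usageMetadata.
    usage = raw.get("usageMetadata", {})
    parts = raw["candidates"][0]["content"]["parts"]
    return NormalizedLog(
        provider="gemini",
        model=raw.get("modelVersion", "unknown"),
        response="".join(part.get("text", "") for part in parts),
        prompt_tokens=usage.get("promptTokenCount", 0),
        completion_tokens=usage.get("candidatesTokenCount", 0),
    )


ADAPTERS = {"openai": from_openai, "gemini": from_gemini}


def normalize(provider: str, raw: dict) -> NormalizedLog:
    if provider not in ADAPTERS:
        raise ValueError(f"No adapter registered for provider {provider!r}")
    return ADAPTERS[provider](raw)
```

Switching providers then means writing one new adapter; the evaluation code downstream stays untouched.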

Inspect and debug complex traces and chats

Interpretation & Analytics

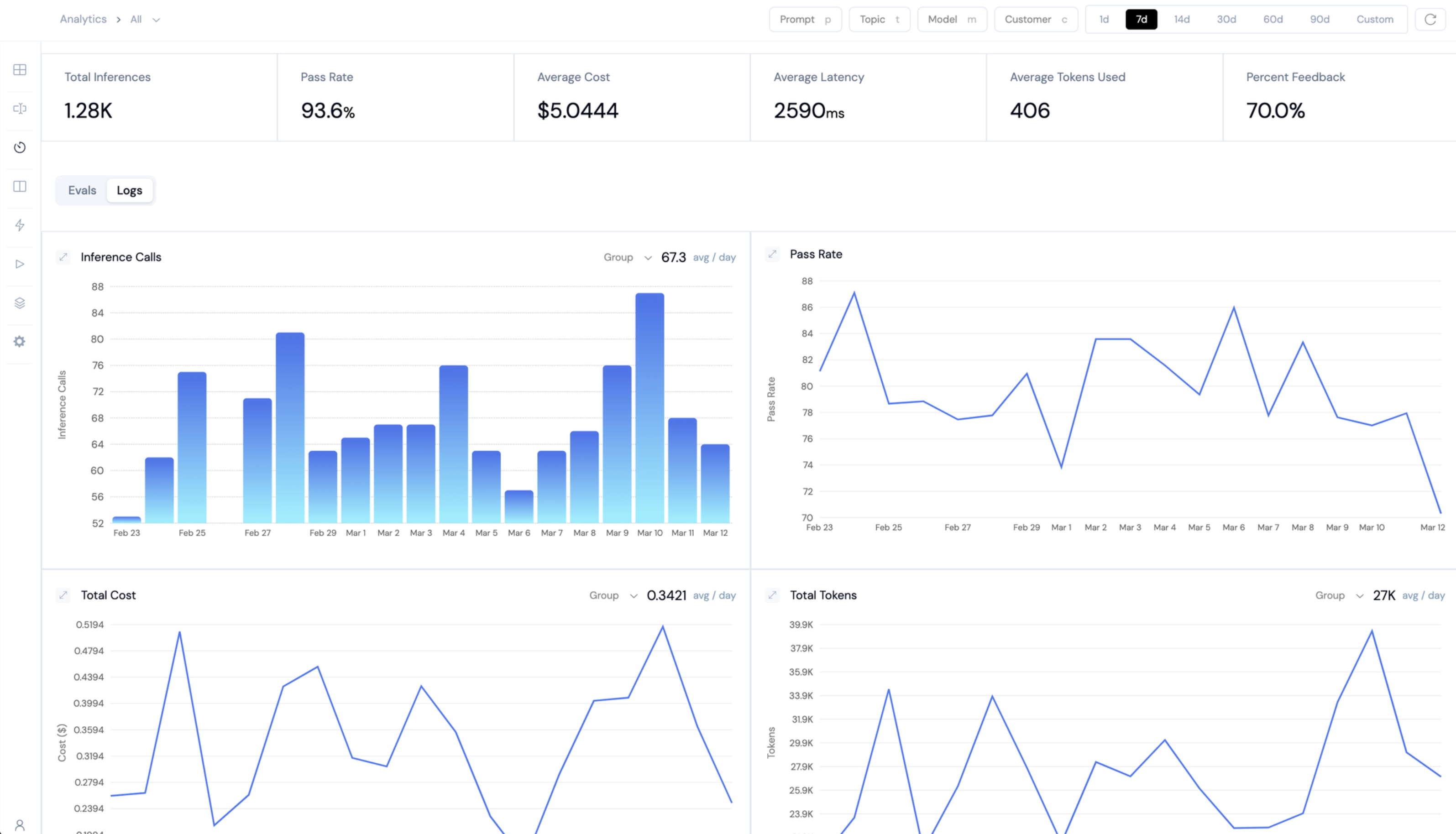

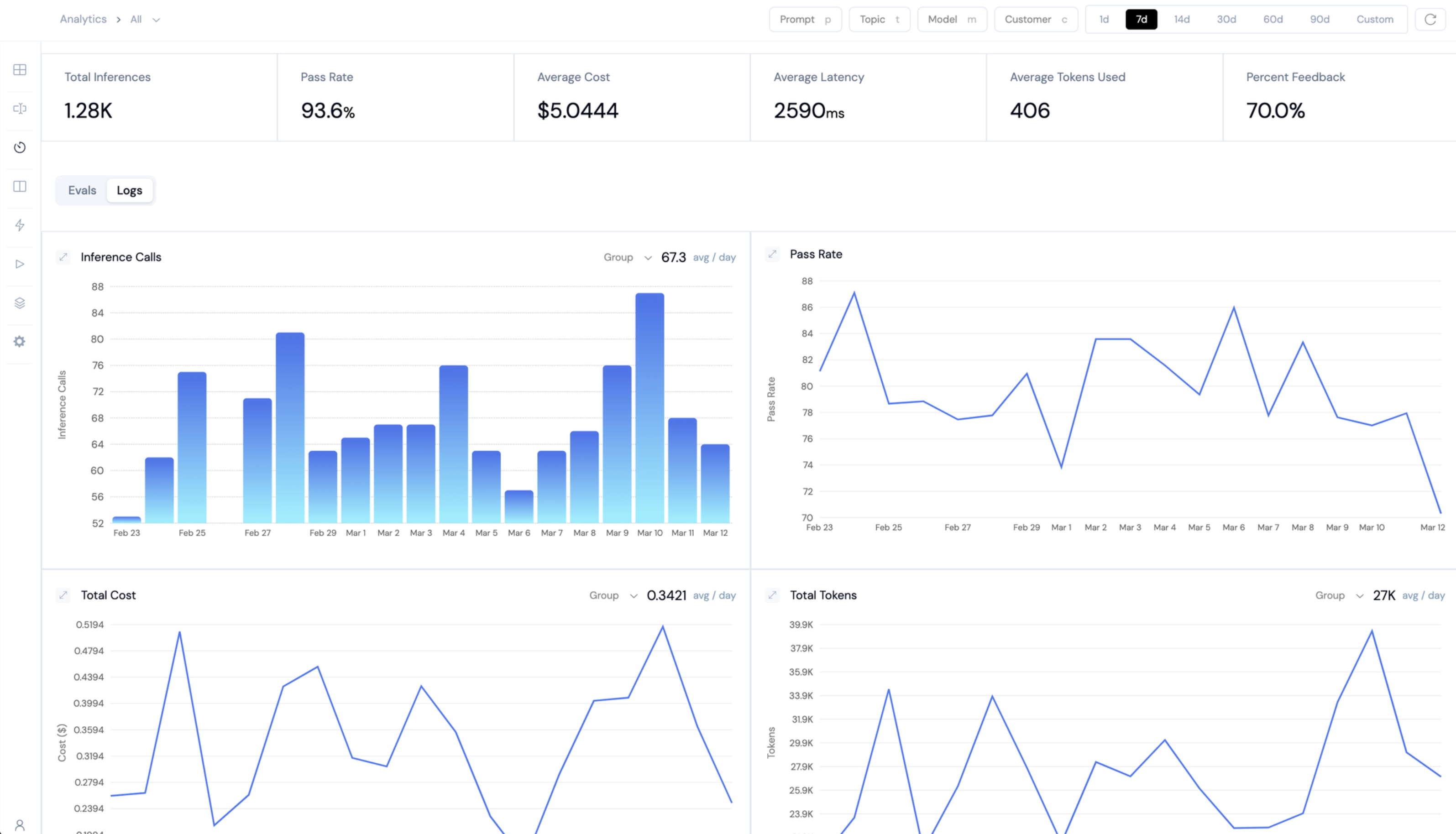

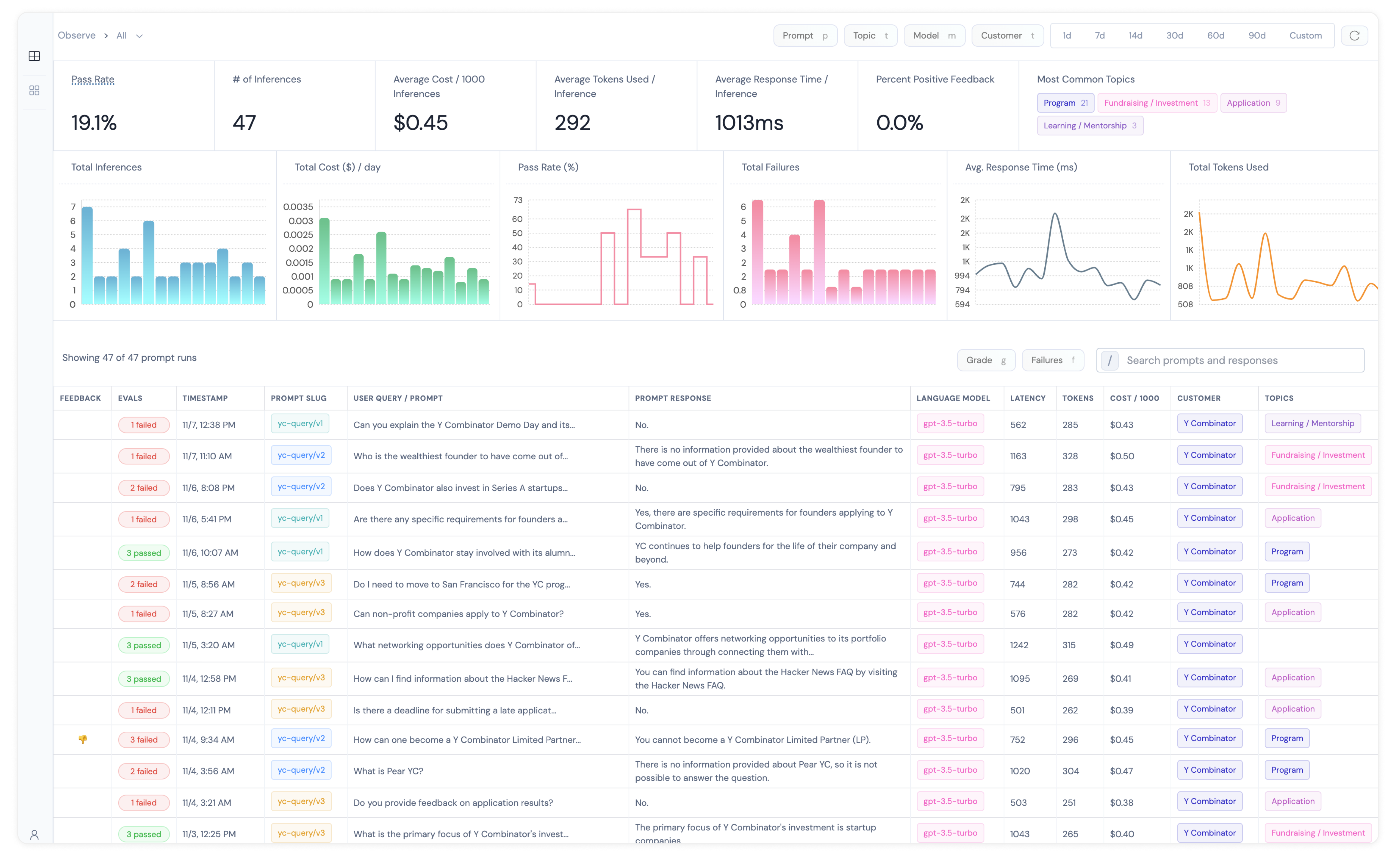

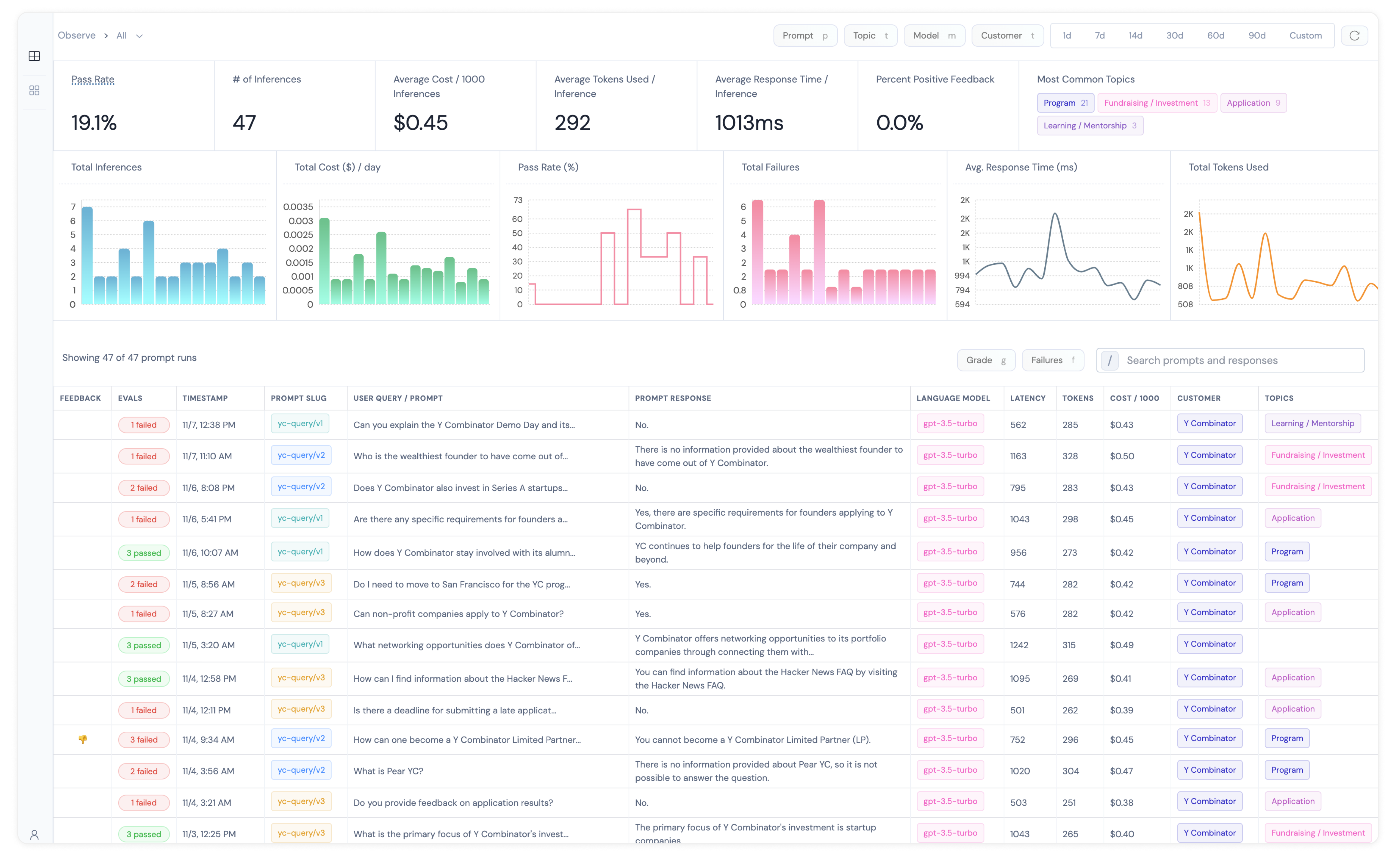

What do you do with the eval metrics that were calculated? Ideally, you want to be able to:

- Measure overall app performance

- Measure retrieval quality

- Measure usage like token counts, cost, response times

- Measure safety issues like PII leakage or prompt injection attacks

- Measure changes over time

- Measure distributions of eval scores (p5, p25, p50, p75, p95, etc)

- Segment the metrics by prompt, model, topic or customer ID
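The last two points (score distributions and segmentation) can be sketched in a few lines. The record shape (a dict with a `score` and a segment field such as `model`) and the function names are assumptions. The percentile uses simple linear interpolation, which is one of several common definitions.

```python
from collections import defaultdict


def percentile(values, p):
    """Linear-interpolation percentile of `values`, with p in [0, 100]."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    ordered = sorted(values)
    k = (len(ordered) - 1) * p / 100
    lo = int(k)
    hi = min(lo + 1, len(ordered) - 1)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (k - lo)


def segment_scores(records, key, percentiles=(5, 25, 50, 75, 95)):
    """Group eval scores by `key` (e.g. model or customer ID) and summarize each group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record["score"])
    return {
        segment: {f"p{p}": percentile(scores, p) for p in percentiles}
        for segment, scores in groups.items()
    }
```

Calling `segment_scores(records, "model")` gives one distribution summary per model, and the same call with a different key segments by prompt, topic, or customer ID.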

Observability & Alerts

Of course, along with all this, you will also want to be able to:

- Manually inspect the traces

- Manually annotate the traces individually

- Consolidate online and offline eval metrics

- Configure alerts to PagerDuty or Slack when failures increase

- Export the data

- Connect to the logs via API / GraphQL
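As an illustration of the alerting point, here is a minimal sliding-window check on the eval failure rate. The `FailureRateAlert` class is hypothetical, and `notify` stands in for whatever function posts to Slack or PagerDuty in your setup. No real integration is shown.

```python
from collections import deque


class FailureRateAlert:
    """Fires `notify` once when the failure rate over the last `window` evals
    rises above `threshold`, then re-arms after the rate recovers."""

    def __init__(self, window, threshold, notify):
        self.results = deque(maxlen=window)
        self.threshold = threshold
        self.notify = notify
        self.firing = False

    def record(self, passed):
        self.results.append(passed)
        if len(self.results) < self.results.maxlen:
            return  # not enough data for a full window yet
        rate = self.results.count(False) / len(self.results)
        if rate > self.threshold and not self.firing:
            self.firing = True
            self.notify(f"Eval failure rate {rate:.0%} exceeds {self.threshold:.0%}")
        elif rate <= self.threshold:
            self.firing = False
```

Tracking the `firing` state means a sustained incident produces one alert rather than one per failed eval.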

Collaboration

The tool you use should also support collaboration features so teammates can work together.

Solution: Build team features, access controls, and separate workspaces.